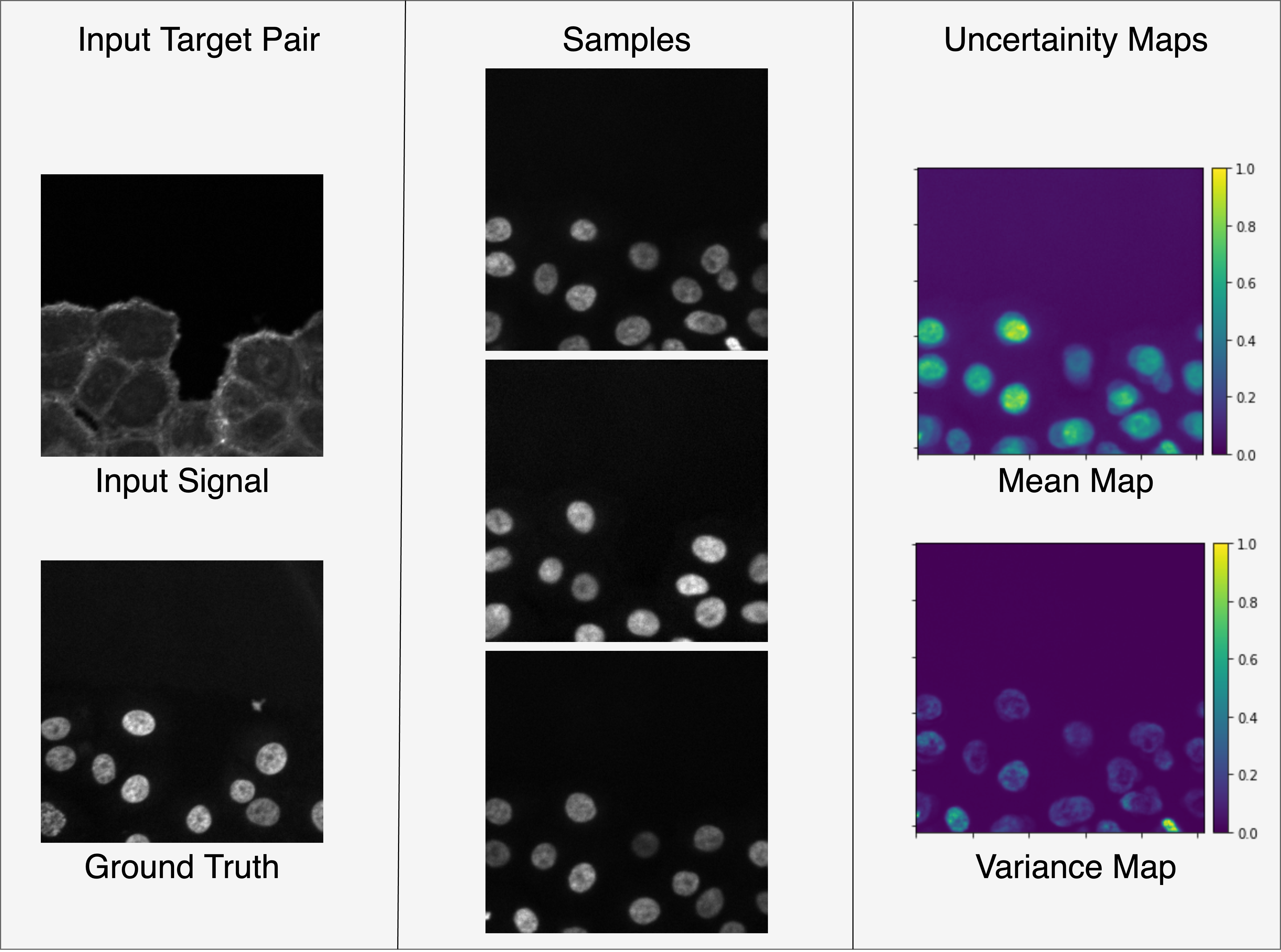

Fluorescence microscopy has many applications, especially in healthcare. However, it is often expensive and time-consuming, and it can damage cells. A potential solution is to use transmitted light images, which are relatively cheap to obtain and are label/dye free, but which lack clear and specific contrast between different structures. My work therefore explored the use of diffusion models to translate transmitted light images into fluorescence images. Compared with traditional methods such as U-Net, my approach can also produce variance maps, because it tries to predict the entire distribution of target images rather than a single output.
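The variance-map idea can be illustrated with a minimal sketch: draw several samples for the same input image and compute per-pixel statistics across them. This is not the sampling code from this repository. `variance_map`, `sample_fn`, and `toy_sampler` are hypothetical names, and `toy_sampler` is only a stand-in for a trained conditional diffusion sampler.

```python
import numpy as np

def variance_map(sample_fn, condition, n_samples=8, seed=0):
    """Draw n_samples predictions for one conditioning image and return
    the per-pixel mean and variance across those samples."""
    rng = np.random.default_rng(seed)
    samples = np.stack([sample_fn(condition, rng) for _ in range(n_samples)])
    return samples.mean(axis=0), samples.var(axis=0)

def toy_sampler(condition, rng):
    # Hypothetical stand-in for a trained conditional diffusion sampler:
    # the injected noise is larger where the input image is brighter.
    return condition + rng.normal(size=condition.shape) * 0.1 * condition

# Toy 4x4 "transmitted light" image with intensities from 0 to 1.
transmitted = np.linspace(0.0, 1.0, 16).reshape(4, 4)
mean_img, var_img = variance_map(toy_sampler, transmitted, n_samples=32)
print(mean_img.shape, var_img.shape)
```

With a real model, `sample_fn` would run the full reverse diffusion process conditioned on the transmitted light image. Pixels with high variance are those where the samples disagree, which can be read as regions where the prediction is less certain.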

Diffusion sampling output example: transmitted light image to fluorescent target (TOM20 labeled with Alexa Fluor 594):

Dataset credit: Spahn, C., & Heilemann, M. (2020). ZeroCostDL4Mic - Label-free prediction (fnet) example training and test dataset (Version v2) [Data set]. Zenodo. https://doi.org/10.5281/zenodo.3748967

| Input Transmitted Light Image | Ground Truth Fluorescent Target (TOM20) | Diffusion Model Sampling Process |
|---|---|---|

| Input Conditional Signal (lifeact-RFP) | Ground Truth Target (sir-DNA) | Diffusion Model Sampling Process |
|---|---|---|

https://drive.google.com/file/d/15_wCXFuqHkFsNnH8OVUgwUu_2FYEw33r/view?usp=sharing

https://docs.google.com/presentation/d/1GJT3Eeq-3QbhA5H54fTH7MeN7_pI-xaNqsKhT9L33c8/edit?usp=sharing

Credits:

- Dhariwal, P., & Nichol, A. (2021). Diffusion Models Beat GANs on Image Synthesis. CoRR, abs/2105.05233. https://arxiv.org/abs/2105.05233. Source code: https://github.com/openai/guided-diffusion

- https://github.com/junyanz/pytorch-CycleGAN-and-pix2pix - For the discriminator network architecture

- Weng, Lilian. (Jul 2021). What are diffusion models? Lil’Log. https://lilianweng.github.io/posts/2021-07-11-diffusion-models/

- Many thanks also to my supervisor, Dr. Iain Styles (https://www.cs.bham.ac.uk/~ibs/), without whom this work would not have been possible. His constant assistance and guidance at every stage of the research were immensely helpful. Thanks also to Dr. Carl Wilding (https://uk.linkedin.com/in/carl-wilding-4048a5101) for providing constructive feedback on my project.

For complete credits, please refer to my report. If you run into any issues, please feel free to contact me and I'll try to respond.