Installing a Rocks 6.2 cluster with the Open vSwitch Roll

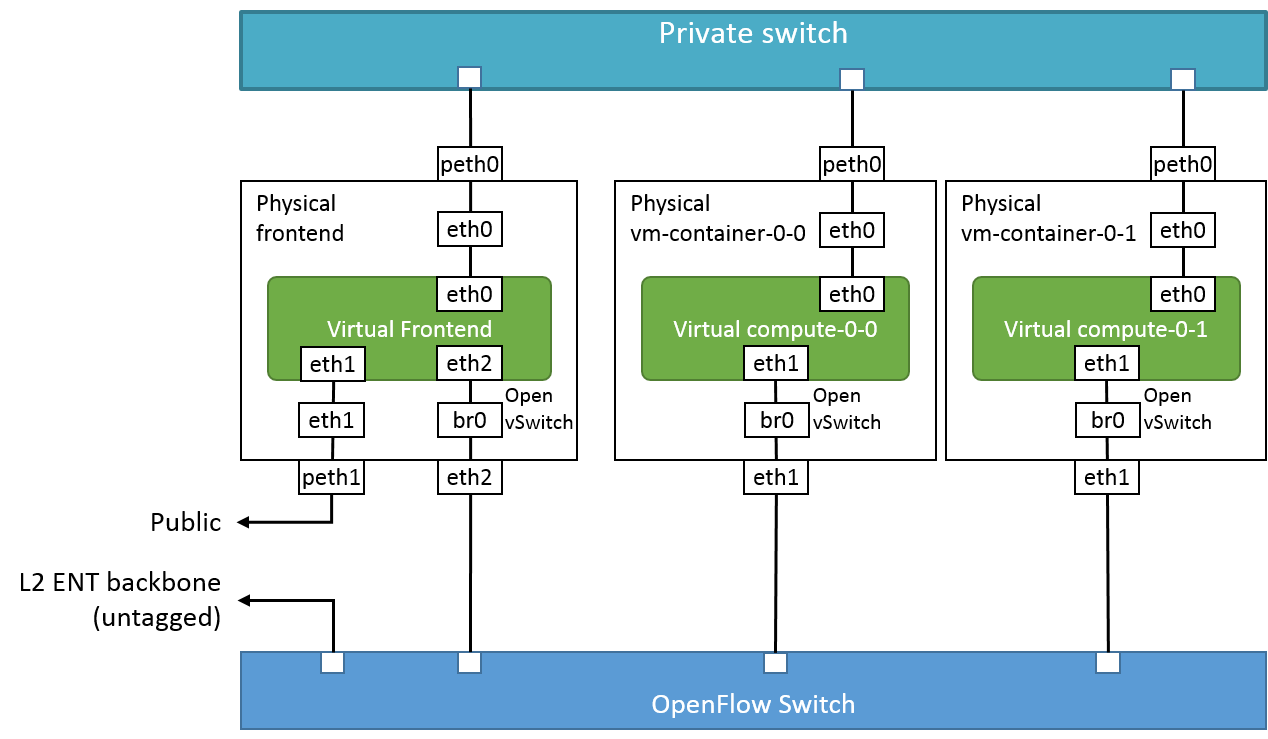

A Rocks cluster requires at least two network interfaces on the frontend node (one private, one public) and one network interface on each vm-container node (private). We recommend one additional interface on each node for the OpenFlow data plane. This additional interface can be connected to an OpenFlow switch, and Open vSwitch bridges that connection to the VMs.

Install your Rocks 6.2 cluster. Currently, the Open vSwitch Roll is available only for Rocks 6.2. For installation instructions, see the Rocks documentation: http://central6.rocksclusters.org/roll-documentation/base/6.2/

We recommend installing the KVM Roll so that the cluster can host the virtual machines that will be connected to ENT.

Log in to the physical frontend of the Rocks 6.2 cluster as root, and run the following commands to build the Open vSwitch Roll.

mkdir -p /export/home/repositories

cd /export/home/repositories

git clone https://github.com/rocksclusters/openvswitch.git

cd openvswitch

make roll

These commands create the Open vSwitch Roll, openvswitch-2.3.1-0.x86_64.disk1.iso.

Install the Open vSwitch Roll by running the following commands on the frontend:

rocks add roll openvswitch-2.3.1-0.x86_64.disk1.iso

rocks enable roll openvswitch

cd /export/rocks/install

rocks create distro

Review the output of the following command. It prints the installation script without running it.

rocks run roll openvswitch

If the output looks correct, pipe it to a shell to run it.

rocks run roll openvswitch | sh

Check that the RPMs are installed properly.

# rpm -qa | grep openvswitch

openvswitch-2.3.1-1.x86_64

kmod-openvswitch-2.3.1-1.el6.x86_64

roll-openvswitch-usersguide-2.3.1-0.x86_64

openvswitch-command-plugins-1-3.x86_64
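The check above can also be scripted. The following is a minimal sketch and not part of the roll: the helper name `missing_ovs_rpms` is hypothetical, and the four package names are taken from the listing above. It reads an `rpm -qa` listing on standard input and prints every expected package that is absent.

```shell
#!/bin/sh
# Hypothetical helper: print each expected Open vSwitch package that is
# missing from an `rpm -qa` listing supplied on standard input.
missing_ovs_rpms() {
    installed=$(cat)
    for pkg in openvswitch kmod-openvswitch \
               roll-openvswitch-usersguide openvswitch-command-plugins; do
        # Match "<name>-<digit>" at the start of a line so that "openvswitch"
        # does not also match "openvswitch-command-plugins".
        printf '%s\n' "$installed" | grep -q "^${pkg}-[0-9]" || echo "$pkg"
    done
}
```

Running `rpm -qa | missing_ovs_rpms` on the frontend should print nothing when all four packages are present.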

If the RPMs are not installed, run the following:

cd /export/home/repositories/openvswitch/RPMS/x86_64

rpm -ivh *.rpm

For vm-containers, run the following command on the frontend:

rocks run host vm-container "yum clean all; yum install openvswitch kmod-openvswitch openvswitch-command-plugins"

Another option for vm-containers:

rocks run roll openvswitch > /share/apps/add-openswitch

rocks run host vm-container "bash /share/apps/add-openswitch"

To support the Open vSwitch Roll, the Base Roll needs to be updated.

cd /export/home/repositories

git clone https://github.com/rocksclusters/base.git

cd base

git checkout rocks-6.2-ovs

cd src/rocks-pylib

make rpm

yum update ../../RPMS/noarch/rocks-pylib-6.2-2.noarch.rpm

To add this RPM on vm-containers:

cp ../../RPMS/noarch/rocks-pylib-6.2-2.noarch.rpm /export/rocks/install/contrib/6.2/x86_64/RPMS/

(cd /export/rocks/install; rocks create distro)

rocks run host vm-container "yum clean all; yum update rocks-pylib"

The above commands add the RPMs, so the vm-containers do not need to be reinstalled. However, if no VMs are running, you can reinstall the nodes instead. Repeat the following commands for each vm-container node.

rocks set host boot vm-container-0-0 action=install

ssh vm-container-0-0 "shutdown -r now"
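When there are many nodes, the two reinstall commands can be generated per host. This is a minimal sketch: the function name `reinstall_commands` is hypothetical, and the host names are the example names used on this page. It only prints the commands so that they can be reviewed before anything is run.

```shell
#!/bin/sh
# Hypothetical helper: print the reinstall commands for each host given as
# an argument. Nothing is executed here.
reinstall_commands() {
    for host in "$@"; do
        printf 'rocks set host boot %s action=install\n' "$host"
        printf 'ssh %s "shutdown -r now"\n' "$host"
    done
}

reinstall_commands vm-container-0-0 vm-container-0-1
```

After checking the output, it can be piped to `sh` on the frontend.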

Run the following command on the frontend. The addresses specified in the command will not be used, but you should still assign a unique IP range.

rocks add network openflow subnet=192.168.0.0 netmask=255.255.255.0

Add a bridge device on the frontend node. Replace <frontend> with your cluster name, and replace tcp:xxx.xxx.xxx.xxx:xxxx with the address and port of your OpenFlow controller.

rocks add host interface <frontend> br0 subnet=openflow module=ovs-bridge

rocks set host interface options <frontend> br0 options='set-fail-mode $DEVICE secure -- set bridge $DEVICE protocol=OpenFlow10 -- set-controller $DEVICE tcp:xxx.xxx.xxx.xxx:xxxx'

Add bridge devices on the vm-container nodes.

rocks add host interface vm-container-0-0 br0 subnet=openflow module=ovs-bridge

rocks set host interface options vm-container-0-0 br0 options='set-fail-mode $DEVICE secure -- set bridge $DEVICE protocol=OpenFlow10 -- set-controller $DEVICE tcp:xxx.xxx.xxx.xxx:xxxx'

rocks add host interface vm-container-0-1 br0 subnet=openflow module=ovs-bridge

rocks set host interface options vm-container-0-1 br0 options='set-fail-mode $DEVICE secure -- set bridge $DEVICE protocol=OpenFlow10 -- set-controller $DEVICE tcp:xxx.xxx.xxx.xxx:xxxx'

...

(repeat for all vm-container nodes)
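The repetition above can be generated with a loop. The following is a minimal sketch under stated assumptions: the function name `bridge_commands` is hypothetical, and `192.0.2.10:6633` is only a placeholder for your OpenFlow controller address and port. The function prints the commands instead of running them, and it keeps `$DEVICE` literal because the options string on this page passes it through unexpanded.

```shell
#!/bin/sh
# Hypothetical helper: print the bridge setup commands for each host.
# $1 is the OpenFlow controller as address:port; the rest are host names.
bridge_commands() {
    controller=$1
    shift
    # Single quotes keep $DEVICE literal; only the controller is substituted.
    opts='set-fail-mode $DEVICE secure -- set bridge $DEVICE protocol=OpenFlow10 -- set-controller $DEVICE tcp:'"$controller"
    for host in "$@"; do
        printf 'rocks add host interface %s br0 subnet=openflow module=ovs-bridge\n' "$host"
        printf "rocks set host interface options %s br0 options='%s'\n" "$host" "$opts"
    done
}

# Placeholder controller address; replace it with your own.
bridge_commands 192.0.2.10:6633 vm-container-0-0 vm-container-0-1
```

Review the printed commands, then pipe them to `sh` on the frontend if they match what you intend.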

If the physical network device you want to add has not yet appeared in the output of rocks list host interface, run the following commands. Replace eth2 and eth1 with the network devices you want to connect. In this example, eth2 is used on the frontend and eth1 on the vm-container nodes.

rocks add host interface <frontend> eth2 subnet=openflow module=ovs-link

rocks set host interface options <frontend> eth2 options=nobridge

rocks add host interface vm-container-0-0 eth1 subnet=openflow module=ovs-link

rocks set host interface options vm-container-0-0 eth1 options=nobridge

rocks add host interface vm-container-0-1 eth1 subnet=openflow module=ovs-link

rocks set host interface options vm-container-0-1 eth1 options=nobridge

...

(repeat for all vm-container nodes)

If the physical network devices already appear in the output of rocks list host interface, run the following commands.

rocks set host interface module <frontend> eth2 ovs-link

rocks set host interface subnet <frontend> eth2 openflow

rocks set host interface options <frontend> eth2 options=nobridge

rocks set host interface module vm-container-0-0 eth1 ovs-link

rocks set host interface subnet vm-container-0-0 eth1 openflow

rocks set host interface options vm-container-0-0 eth1 options=nobridge

rocks set host interface module vm-container-0-1 eth1 ovs-link

rocks set host interface subnet vm-container-0-1 eth1 openflow

rocks set host interface options vm-container-0-1 eth1 options=nobridge

...

(repeat for all vm-container nodes)
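Both cases above (device not yet listed, device already listed) follow the same per-host pattern, so the commands can be generated. This is a minimal sketch: the function name `link_commands` and its `new`/`existing` mode argument are hypothetical, introduced only for illustration. It prints the commands without running them.

```shell
#!/bin/sh
# Hypothetical helper: print the ovs-link commands for one device on each host.
# $1 is "new" (device not yet listed) or "existing" (device already listed),
# $2 is the device name, and the remaining arguments are host names.
link_commands() {
    mode=$1
    device=$2
    shift 2
    for host in "$@"; do
        case $mode in
            new)
                printf 'rocks add host interface %s %s subnet=openflow module=ovs-link\n' "$host" "$device"
                ;;
            existing)
                printf 'rocks set host interface module %s %s ovs-link\n' "$host" "$device"
                printf 'rocks set host interface subnet %s %s openflow\n' "$host" "$device"
                ;;
            *)
                echo "unknown mode: $mode" >&2
                return 1
                ;;
        esac
        # Both cases finish by setting the nobridge option.
        printf 'rocks set host interface options %s %s options=nobridge\n' "$host" "$device"
    done
}

link_commands existing eth1 vm-container-0-0 vm-container-0-1
```

For the frontend in this page's example, the call would use `eth2` and your cluster name instead.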

If you also find peth2 and peth1, which are generated for the KVM bridge, you need to remove them. DO NOT remove pethX interfaces that are actually used for KVM. Remove only the pethX interface that corresponds to the device you are adding to Open vSwitch (in the above example, peth2 on the frontend and peth1 on the vm-containers).

On the frontend (removing peth2):

rm /etc/sysconfig/network-scripts/ifcfg-peth2

vi /etc/udev/rules.d/70-persistent-net.rules

(Edit 70-persistent-net.rules and remove lines for peth2)

reboot

On the vm-containers (removing peth1):

rm /etc/sysconfig/network-scripts/ifcfg-peth1

vi /etc/udev/rules.d/70-persistent-net.rules

(Edit 70-persistent-net.rules and remove lines for peth1)

reboot
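As an alternative to editing the rules file by hand, the matching lines can be filtered out. This is a minimal sketch and rests on an assumption: each rule line in 70-persistent-net.rules names its interface as `NAME="pethX"`. The function name `strip_udev_iface` is hypothetical. It writes the filtered rules to standard output and leaves the original file untouched.

```shell
#!/bin/sh
# Hypothetical helper: print a udev rules file without the rule lines that
# name the given interface. The input file is not modified.
# $1 is the interface name (for example peth2), $2 is the rules file.
strip_udev_iface() {
    iface=$1
    rules=$2
    # Assumes rule lines carry NAME="<iface>"; all other lines pass through.
    grep -v "NAME=\"${iface}\"" "$rules"
}
```

For example, redirect the output of `strip_udev_iface peth2 /etc/udev/rules.d/70-persistent-net.rules` to a temporary file, compare it with the original using `diff`, and copy it into place only if the difference is what you expect.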

Confirm the network configuration.

rocks report host interface <frontend>

Then, run the sync command.

rocks sync host network <frontend>

Repeat for the vm-containers.

rocks report host interface vm-container-0-0

rocks sync host network vm-container-0-0

rocks report host interface vm-container-0-1

rocks sync host network vm-container-0-1

...
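The report and sync steps can be run over a list of hosts. The following is a minimal sketch: the function names `report_hosts` and `sync_hosts` and the `ROCKS` variable are hypothetical. Reporting and syncing are kept as separate functions so that the reported configuration can be reviewed before anything is synced.

```shell
#!/bin/sh
# Command used to invoke Rocks; set ROCKS=echo to preview without running.
ROCKS=${ROCKS:-rocks}

# Print the interface report for each host, stopping at the first failure.
report_hosts() {
    for host in "$@"; do
        "$ROCKS" report host interface "$host" || return 1
    done
}

# Sync the network configuration of each host, stopping at the first failure.
sync_hosts() {
    for host in "$@"; do
        "$ROCKS" sync host network "$host" || return 1
    done
}
```

A typical sequence would be `report_hosts vm-container-0-0 vm-container-0-1`, a review of the output, and then `sync_hosts` with the same host names.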

If you would like your virtual cluster to join the OpenFlow network, run these commands. They add another network interface to the VMs. We recommend leaving the default public and private networks as they are. Remember to shut down the virtual cluster before running the following commands.

rocks add host interface frontend-0-0-0 ovs subnet=openflow mac=`rocks report vm nextmac`

rocks add host interface hosted-vm-0-0-0 ovs subnet=openflow mac=`rocks report vm nextmac`

rocks add host interface hosted-vm-0-1-0 ovs subnet=openflow mac=`rocks report vm nextmac`

...

rocks sync config frontend-0-0-0

rocks sync config hosted-vm-0-0-0

rocks sync config hosted-vm-0-1-0

...
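The add and sync commands above can be looped over the VM names. This is a minimal sketch: the function name `add_ovs_interfaces` and the `ROCKS` variable are hypothetical. As in the commands above, it asks `rocks report vm nextmac` for a fresh MAC address before each add, and it syncs only after every interface has been added.

```shell
#!/bin/sh
# Command used to invoke Rocks; override it to test without a cluster.
ROCKS=${ROCKS:-rocks}

# Add an "ovs" interface on the openflow subnet to each VM, then sync each VM.
# The virtual cluster must be shut down before this is run.
add_ovs_interfaces() {
    for vm in "$@"; do
        # Request a new MAC address for every interface, as in the manual steps.
        mac=$("$ROCKS" report vm nextmac) || return 1
        "$ROCKS" add host interface "$vm" ovs subnet=openflow "mac=$mac" || return 1
    done
    for vm in "$@"; do
        "$ROCKS" sync config "$vm" || return 1
    done
}
```

For the example names on this page, the call would be `add_ovs_interfaces frontend-0-0-0 hosted-vm-0-0-0 hosted-vm-0-1-0`.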
