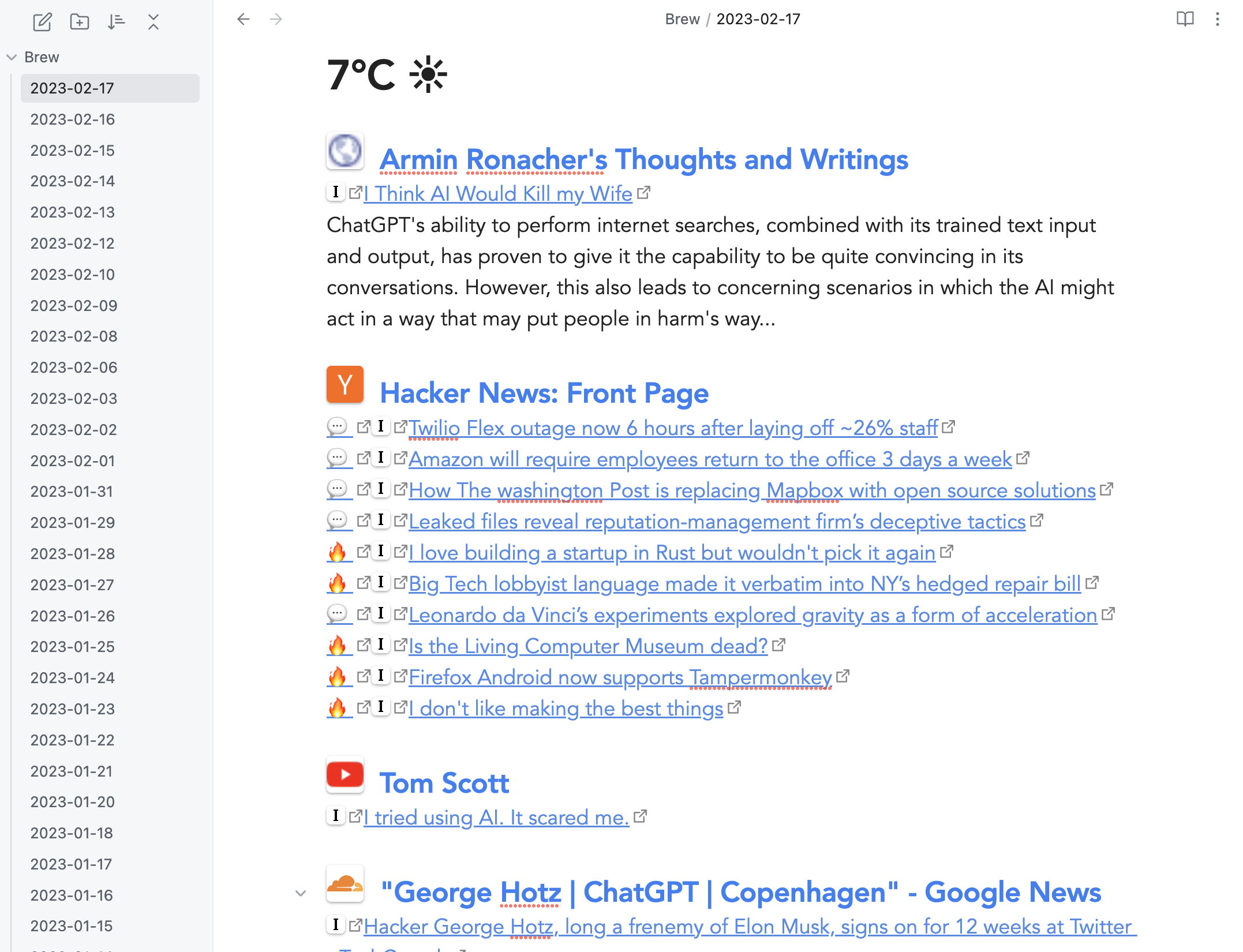

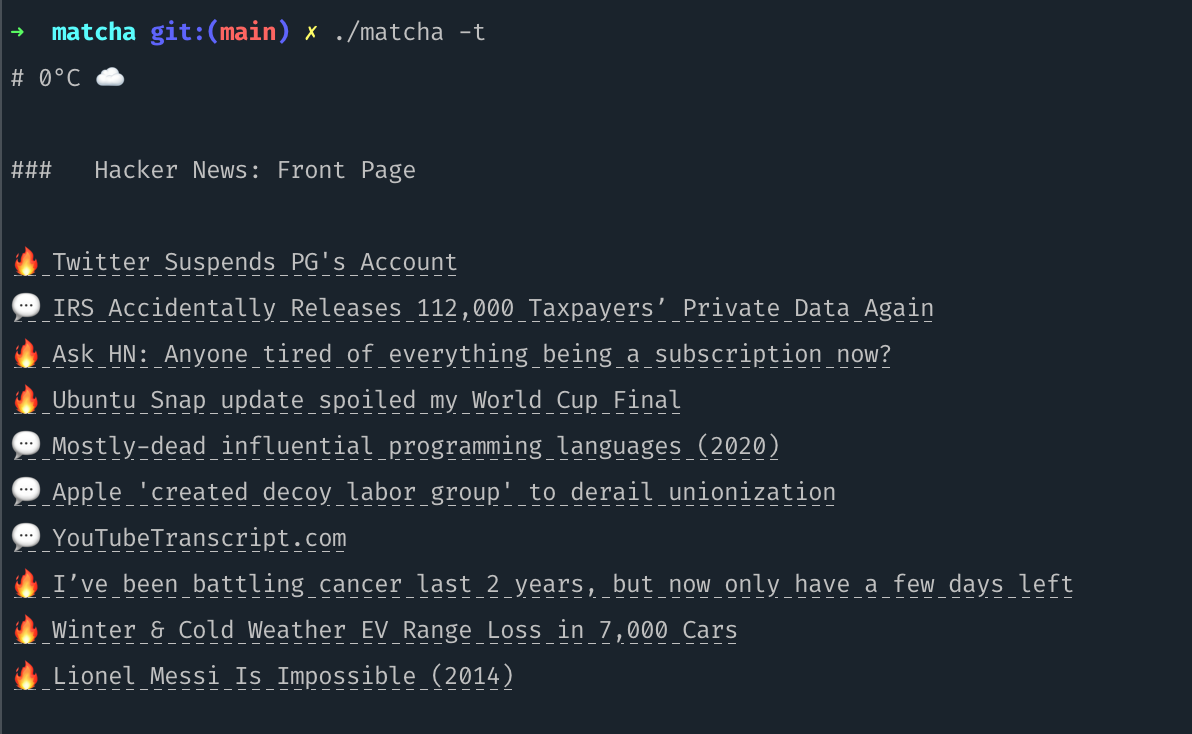

Matcha is a daily digest generator for your RSS feeds and the topics/keywords you are interested in. Using any markdown file viewer (such as Obsidian), or directly from the terminal (`-t` option), you can read your RSS articles whenever you want and at your own pace, avoiding FOMO throughout the day.

- RSS daily digest that shows only articles not included in a previous digest

- Optional summary of articles from OpenAI for selected feeds

- Weather for the next 12 hours (from YR)

- Quick bookmarking of articles to Instapaper

- Topics/keywords of interest to follow (through Google News)

- Direct links to Hacker News comments, with the most discussed posts marked 🔥

- Terminal Mode by calling `./matcha -t`

- Since Matcha generates markdown, any markdown reader should do the job. So far it has been tested with Obsidian. You need a markdown reader before moving on, unless you plan to use terminal mode (`-t` option).

- Download the binary for your OS and execute it (on macOS/Linux, first run `chmod +x matcha-darwin-amd64` to make it executable). A sample `config.yaml` will be generated, where you can add your RSS feeds, keywords and the `markdown_dir_path` where the markdown files should be generated (if left empty, the daily digest is generated in the current directory).

- You can either execute matcha on demand (for example through a terminal alias) or set up a cron job to run it as often as you want. Even if it runs every hour, matcha still generates one digest file per day and adds new articles to it as they are published throughout the day.
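The on-demand and scheduled options above could be set up roughly as follows. This is only a sketch: it assumes the binary was saved as `matcha` in a hypothetical `$HOME/matcha` directory with `config.yaml` next to it, the function names are illustrative, and the hourly schedule is just an example. It prints the alias and crontab lines instead of installing them, so you can review them first.

```shell
#!/bin/sh
# Assumption: the directory that holds the matcha binary (hypothetical path).
MATCHA_DIR="${MATCHA_DIR:-$HOME/matcha}"

# Print an alias for on-demand runs. The subshell keeps the "cd" from
# changing the directory of your interactive shell.
matcha_alias() {
  printf "alias matcha='(cd \"%s\" && ./matcha)'\n" "$1"
}

# Print a crontab entry that runs matcha at minute 0 of every hour.
# It changes directory first so matcha finds config.yaml there.
matcha_cron_line() {
  printf '0 * * * * cd "%s" && ./matcha\n' "$1"
}

matcha_alias "$MATCHA_DIR"
matcha_cron_line "$MATCHA_DIR"
```

The alias line can go into your shell startup file, and the cron line can be pasted into the editor opened by `crontab -e`.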

Note to Go developers: you can also install matcha using `go install github.com/piqoni/matcha@latest`.

On first execution, Matcha generates the following `config.yaml` and a markdown file in the same directory as the application. Change `feeds` to your actual RSS feeds and `google_news_keywords` to the keywords you are interested in. To change where the markdown files are generated, set the full directory path in `markdown_dir_path`.

    markdown_dir_path:
    feeds:
      - http://hnrss.org/best 10
      - https://waitbutwhy.com/feed
      - http://tonsky.me/blog/atom.xml
      - http://www.joelonsoftware.com/rss.xml
      - https://www.youtube.com/feeds/videos.xml?channel_id=UCHnyfMqiRRG1u-2MsSQLbXA
    google_news_keywords: George Hotz,ChatGPT,Copenhagen
    instapaper: true
    weather_latitude: 37.77
    weather_longitude: 122.41
    terminal_mode: false
    opml_file_path:
    markdown_file_prefix:
    markdown_file_suffix:
    reading_time: false
    sunrise_sunset: false
    openai_api_key:
    openai_base_url:
    openai_model:
    summary_feeds:

To use the summarization feature, you first need an OpenAI account. If you haven't already done so, you can sign up here. Once registered, you need to acquire an OpenAI API key, which can be found here.

Alternatively, you may use LocalAI (see the "LocalAI Support" section below for more information).

Next, list the feeds you want summarized under `summary_feeds` in the configuration file. Here is an example configuration:

    openai_api_key: sk-xxxxxxxxxxxxxxxxx
    summary_feeds:
      - http://hnrss.org/best 10

Replace `sk-xxxxxxxxxxxxxxxxx` with your OpenAI API key and include under `summary_feeds` the RSS feeds whose articles you want summarized.

You can also choose which model is used for summarization by setting `openai_model` to one of the values listed here. It currently defaults to 'gpt-3.5-turbo'; 'gpt-4' is also a valid model name.

    openai_model: gpt-3.5-turbo

If you want to use LocalAI for summarization, whether for cost-efficiency or privacy reasons, you first need to set it up and run it. For setup instructions, please visit the LocalAI repository on GitHub here.

After setting up LocalAI, point Matcha to LocalAI's OpenAI-compatible base URL by setting `openai_base_url` in the configuration file. For instance, if your LocalAI server is running locally on port 8080, your configuration would look like this:

    openai_base_url: http://localhost:8080/v1
    openai_model: openllama-3b

In this case, 'http://localhost:8080/v1' is the base URL where your LocalAI server is running, and `openai_model` can be any model compatible with LocalAI. You can also replace `openai_base_url` with another hosted URL, such as an Azure OpenAI endpoint. If you run into errors, you may need to change `openai_model` to match the model you downloaded in LocalAI.
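Before pointing Matcha at a local server, it can help to confirm that the endpoint answers. The sketch below assumes the server exposes the OpenAI-style `GET /models` route under the base URL (verify this against your LocalAI version) and that `curl` is installed. The function names are illustrative and not part of Matcha.

```shell
#!/bin/sh
# Build the model-listing URL from an OpenAI-compatible base URL,
# dropping a trailing slash first if there is one.
models_url() {
  printf '%s/models\n' "${1%/}"
}

# Ask the server which models it serves; the names in the response are
# candidates for openai_model. Not called here because it needs a running server.
list_models() {
  curl -fsS "$(models_url "$1")"
}

# Show the URL that would be queried for the example configuration.
models_url "http://localhost:8080/v1"
```

With the server running, `list_models "http://localhost:8080/v1"` should return a JSON list of models. If it fails, check the port and path before changing anything in `config.yaml`.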

Run matcha with the `--help` option to see the current CLI options:

    -c filepath
        Config file path (if you want to override the current directory config.yaml)
    -o filepath
        OPML file path to append feeds from opml files
    -t  Run Matcha in Terminal Mode, no markdown files will be created

To use OPML files (exported from other services), rename your file to `config.opml` and leave it in the directory where matcha is located. Alternatively, run matcha with the `-o` option pointing to the OPML file path.
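For reference, a minimal OPML file can also be written by hand. The sketch below creates one in the current directory. The feed URLs are taken from the sample configuration, the titles are placeholders, and it assumes Matcha reads the `xmlUrl` attribute of each `outline` element, which is the usual OPML convention for subscription lists.

```shell
#!/bin/sh
# Write a minimal OPML 2.0 subscription list to config.opml.
cat > config.opml <<'EOF'
<?xml version="1.0" encoding="UTF-8"?>
<opml version="2.0">
  <head>
    <title>My feeds</title>
  </head>
  <body>
    <outline type="rss" text="Wait But Why" xmlUrl="https://waitbutwhy.com/feed"/>
    <outline type="rss" text="Joel on Software" xmlUrl="http://www.joelonsoftware.com/rss.xml"/>
  </body>
</opml>
EOF

# Count the feed entries that were written.
grep -c 'xmlUrl=' config.opml
```

If the file sits somewhere other than next to the binary, pass its location with `./matcha -o` followed by the file path.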