```
pip install gguf-node
py -m gguf_node
```

the second command shows a menu:

```
Please select:
- download the full pack
- clone the node only
Enter your choice (1 to 2): _
```

opt 1: download the compressed comfy pack (7z), decompress it, and run the .bat file straight away (the foolproof option)

opt 2: clone the gguf repo into the current directory (either navigate to ./ComfyUI/custom_nodes first, or move the cloned folder there afterwards)

alternatively, you can run the git clone command yourself:

- navigate to ./ComfyUI/custom_nodes

- clone the gguf repo into that folder:

```
git clone https://github.com/calcuis/gguf
```
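
For scripted setups, the same clone step can be sketched in Python. This is only an illustration, not part of gguf-node: it assumes git is on your PATH and that ComfyUI lives at ./ComfyUI (pass a different `comfy_root` for the standalone pack), and the helper names `clone_command` and `clone_gguf_node` are hypothetical.

```python
from __future__ import annotations

import subprocess
from pathlib import Path

REPO_URL = "https://github.com/calcuis/gguf"


def clone_command(comfy_root: str | Path) -> tuple[list[str], Path]:
    """Return the git command and the custom_nodes folder it should run in (hypothetical helper)."""
    return ["git", "clone", REPO_URL], Path(comfy_root) / "custom_nodes"


def clone_gguf_node(comfy_root: str | Path = "./ComfyUI") -> None:
    """Clone the gguf repo into <comfy_root>/custom_nodes (assumes git is installed)."""
    cmd, cwd = clone_command(comfy_root)
    if not cwd.is_dir():
        raise FileNotFoundError(f"custom_nodes folder not found: {cwd}")
    # check=True raises CalledProcessError if git exits with a non-zero status
    subprocess.run(cmd, cwd=cwd, check=True)
```

Calling `clone_gguf_node()` from the folder that contains ComfyUI is equivalent to the two manual steps above; checking for the folder first avoids cloning into the wrong place.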

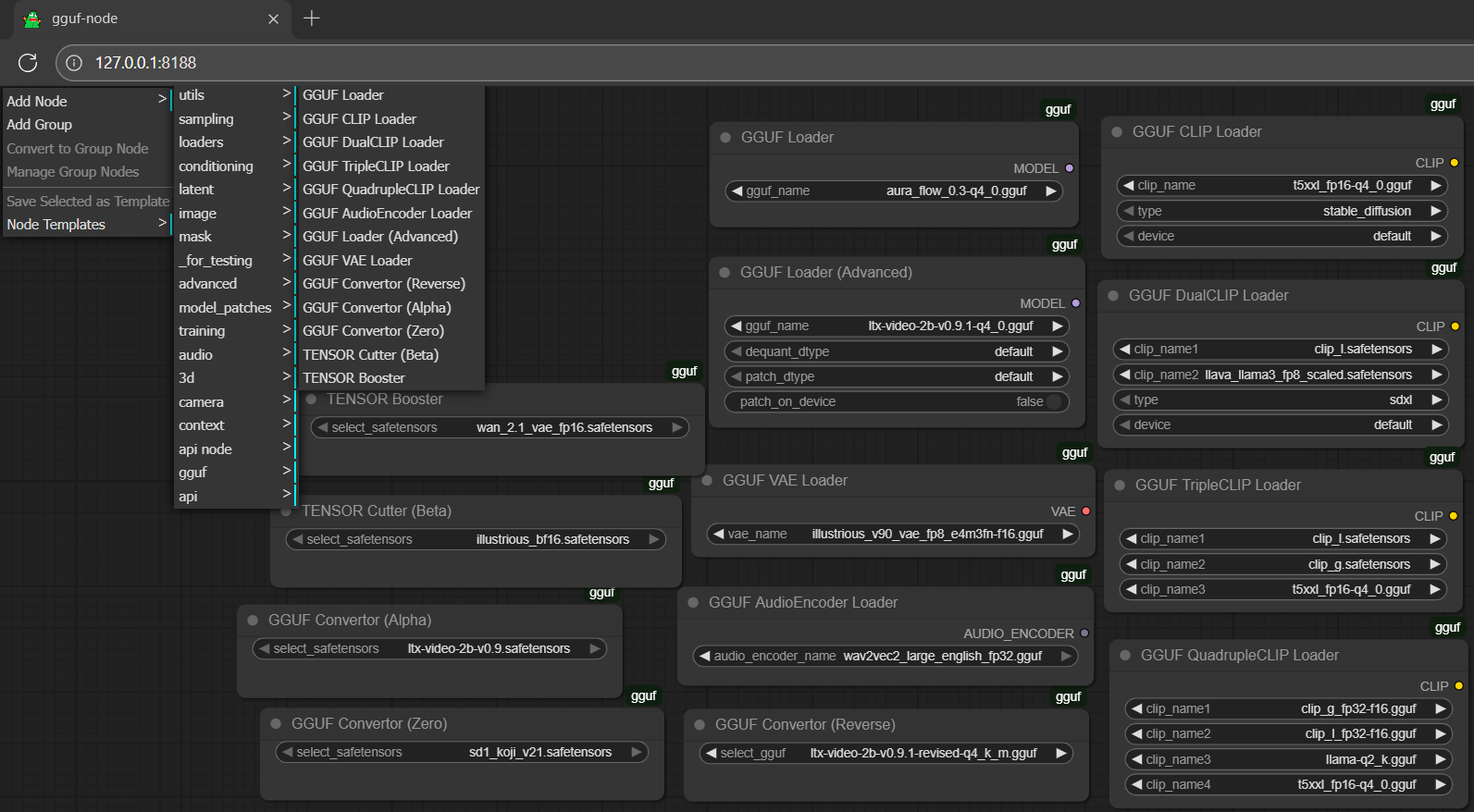

the same steps apply to the standalone pack; afterwards you should find the node under Add Node (look for gguf in the dropdown menu)

🐷🐷📄 as of the latest update, a deployment copy of gguf-connector is bundled with the node itself, so you don't need to clone it into site-packages; the default comfyui environment is sufficient, so no extra dependencies or setup steps are needed 🙌

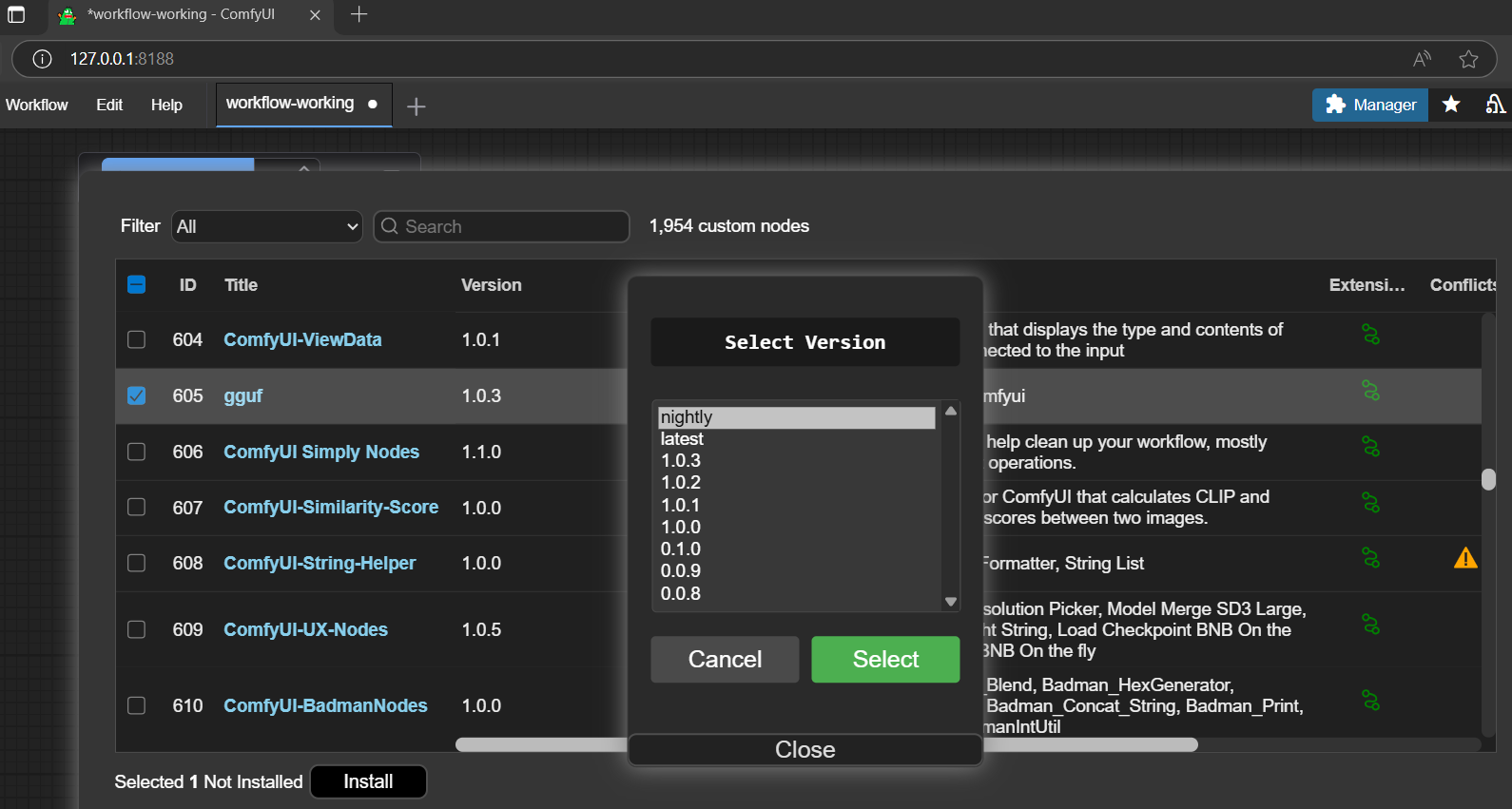

you can also get the node through other channels, e.g., comfy-cli or comfyui-manager (search for gguf in the search bar and install it from there; see picture below)

gguf node does not conflict with the popular comfyui-gguf node, so the two can coexist (this project was actually inspired by comfyui-gguf and built upon its code base; we honor and truly appreciate its developers' great work); you can test both versions, or mix them and switch between them freely, whatever suits your needs; compared with comfyui-gguf, gguf node is more lightweight (no extra dependencies), offers more functions (e.g., a built-in tensor cutter and gguf convertor), and is compatible with the latest numpy and the other updated libraries that come with comfyui

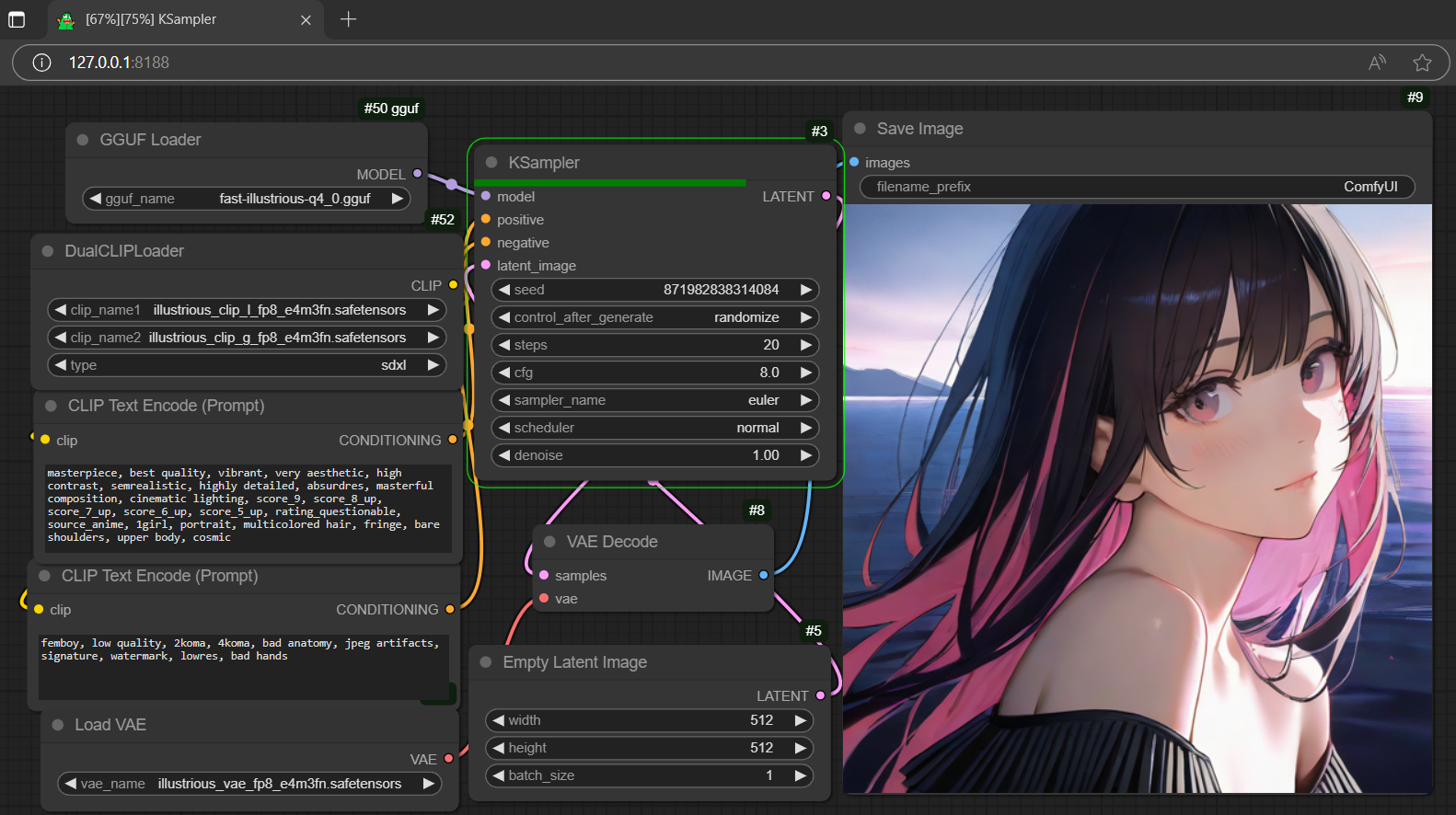

for the demo workflow (picture) above, you can get the test model gguf here and check whether you can generate a similar outcome

- drag gguf file(s) to diffusion_models folder (./ComfyUI/models/diffusion_models)

- drag clip or encoder(s) to text_encoders folder (./ComfyUI/models/text_encoders)

- drag controlnet adapter(s), if any, to controlnet folder (./ComfyUI/models/controlnet)

- drag lora adapter(s), if any, to loras folder (./ComfyUI/models/loras)

- drag vae decoder(s) to vae folder (./ComfyUI/models/vae)

- drag the workflow json file into the browser tab running comfyui; or

- drag any generated output file that contains the workflow metadata (e.g., a picture or video) into the browser tab running comfyui

- design your own prompt; or

- generate a random prompt/descriptor with the simulator (though it may not suit every model)
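
Dropping an output picture works because the workflow is embedded in the file itself. As a sketch, assuming the picture is a PNG saved by comfyui with the UI graph stored as JSON in a text chunk under the key `workflow` (videos and other formats store metadata differently and are not covered here), the embedded workflow can be read with the standard library alone; `png_text_chunks` and `read_workflow` are hypothetical helper names.

```python
from __future__ import annotations

import json
import struct
import zlib

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"


def png_text_chunks(data: bytes) -> dict[str, str]:
    """Collect key/value pairs from the tEXt and iTXt chunks of raw PNG bytes."""
    if not data.startswith(PNG_SIGNATURE):
        raise ValueError("not a PNG file")
    texts: dict[str, str] = {}
    pos = len(PNG_SIGNATURE)
    while pos + 8 <= len(data):
        # each chunk: 4-byte big-endian length, 4-byte type, body, 4-byte CRC
        length, ctype = struct.unpack(">I4s", data[pos:pos + 8])
        body = data[pos + 8:pos + 8 + length]
        pos += 12 + length
        if ctype == b"tEXt":
            key, _, value = body.partition(b"\x00")
            texts[key.decode("latin-1")] = value.decode("latin-1")
        elif ctype == b"iTXt":
            key, _, rest = body.partition(b"\x00")
            compressed = rest[0] == 1
            # skip compression flag and method, then language tag and translated keyword
            _lang, _, rest = rest[2:].partition(b"\x00")
            _tkey, _, value = rest.partition(b"\x00")
            raw = zlib.decompress(value) if compressed else value
            texts[key.decode("latin-1")] = raw.decode("utf-8")
        elif ctype == b"IEND":
            break
    return texts


def read_workflow(path: str) -> dict | None:
    """Return the embedded UI workflow, assuming it is stored under the 'workflow' key."""
    with open(path, "rb") as f:
        raw = png_text_chunks(f.read()).get("workflow")
    return json.loads(raw) if raw is not None else None
```

If `read_workflow` returns `None`, the picture most likely carries no workflow metadata, and dragging it into the browser will not restore a graph either.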

to cut (quantize) a safetensors model with the tensor cutter:

- drag safetensors file(s) to diffusion_models folder (./ComfyUI/models/diffusion_models)

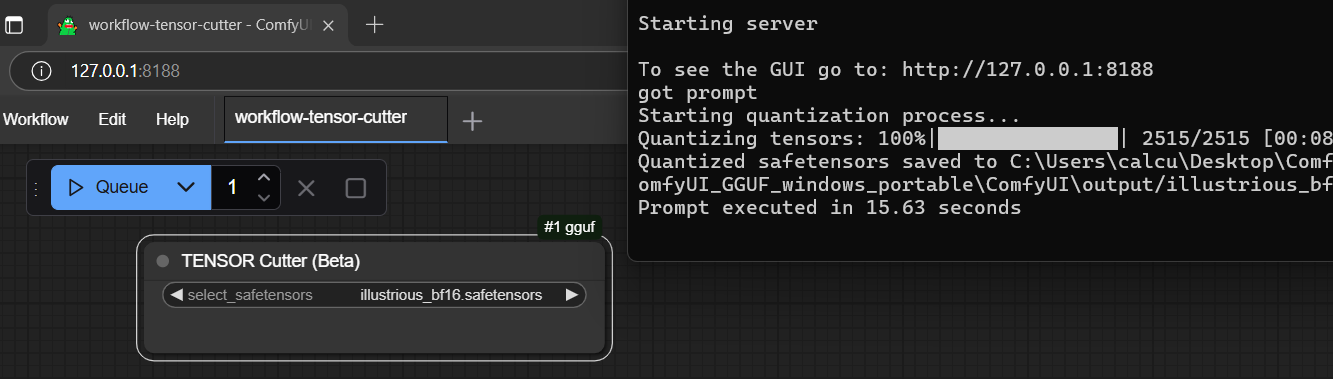

- choose the last option from the gguf menu, TENSOR Cutter (Beta), and select your safetensors model inside the box; you don't need to connect anything, since the node works independently

- click Queue (run), then follow the progress in the console; when it is done, the quantized (half-cut) safetensors file will be saved to the output folder (./ComfyUI/output)
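
To see what the cutter will work on, and roughly how much smaller a half-cut file should be, you can inspect the safetensors header directly: the file starts with an 8-byte little-endian header length, followed by a JSON header listing each tensor's dtype and shape. This is a sketch, not the cutter's code; it assumes the cut casts BF16 tensors to an 8-bit float type (`F8_E4M3` here is an assumption) and leaves other dtypes unchanged, which fits this README's bf16 → fp8 tip but is not confirmed by it.

```python
from __future__ import annotations

import json
import math
import struct

# Bytes per element for the dtype strings used in safetensors headers.
DTYPE_BYTES = {
    "BOOL": 1, "U8": 1, "I8": 1, "F8_E4M3": 1, "F8_E5M2": 1,
    "I16": 2, "U16": 2, "F16": 2, "BF16": 2,
    "I32": 4, "U32": 4, "F32": 4,
    "I64": 8, "U64": 8, "F64": 8,
}


def read_safetensors_header(path: str) -> dict:
    """Parse the header: 8-byte little-endian length, then that many bytes of JSON."""
    with open(path, "rb") as f:
        (header_len,) = struct.unpack("<Q", f.read(8))
        header = json.loads(f.read(header_len))
    header.pop("__metadata__", None)  # optional string metadata, not a tensor
    return header


def estimate_cast_size(header: dict, src: str = "BF16", dst: str = "F8_E4M3") -> tuple[int, int]:
    """Return (current tensor bytes, estimated bytes if every src tensor were cast to dst)."""
    before = after = 0
    for info in header.values():
        count = math.prod(info["shape"])  # element count; an empty shape (scalar) gives 1
        size = count * DTYPE_BYTES[info["dtype"]]
        before += size
        after += count * DTYPE_BYTES[dst] if info["dtype"] == src else size
    return before, after
```

For a model stored entirely in BF16, the estimate comes out at half the original tensor bytes, which is where the "half-cut" name comes from; tensors already in F32 or F8 are counted unchanged.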

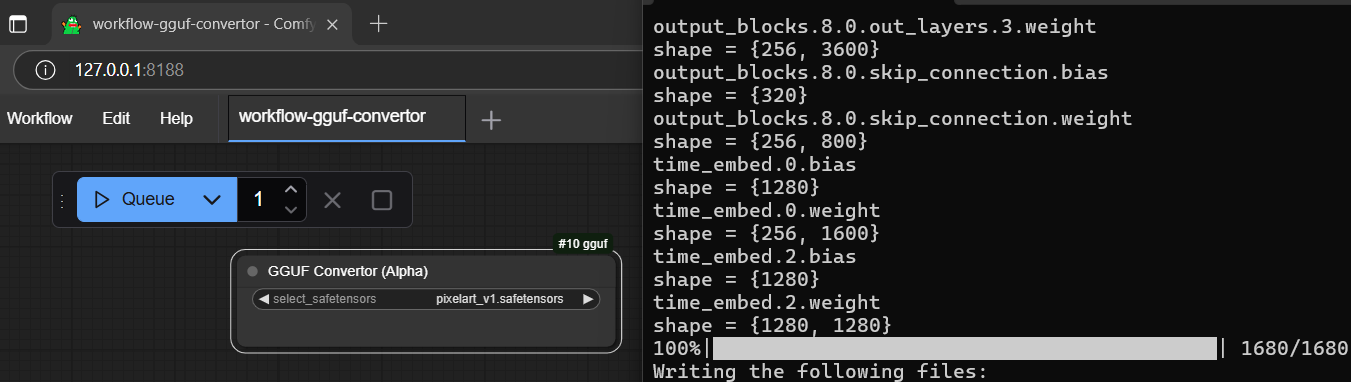

to convert a safetensors model to gguf:

- drag safetensors file(s) to diffusion_models folder (./ComfyUI/models/diffusion_models)

- choose the second last option from the gguf menu, GGUF Convertor (Alpha), and select your safetensors model inside the box; again, you don't need to connect anything, since the node works independently

- click Queue (run), then follow the progress in the console; when it is done, the converted gguf file will be saved to the output folder (./ComfyUI/output)
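
Once the convertor finishes, a quick sanity check on the file in ./ComfyUI/output is to read its fixed-size GGUF header: the 4-byte magic `GGUF`, a uint32 version, then uint64 counts of tensors and metadata key/value pairs (the GGUF v2/v3 layout; v1 used 32-bit counts). A minimal sketch, assuming a little-endian file; `read_gguf_header` is a hypothetical helper, not part of the node.

```python
import struct


def read_gguf_header(path: str) -> dict:
    """Read the fixed-size GGUF header (v2/v3 layout, little-endian)."""
    with open(path, "rb") as f:
        raw = f.read(24)
    if len(raw) < 24 or raw[:4] != b"GGUF":
        raise ValueError("not a GGUF file (bad magic or truncated header)")
    # uint32 version, uint64 tensor count, uint64 metadata key/value count
    version, tensor_count, kv_count = struct.unpack("<IQQ", raw[4:24])
    if version < 2:
        raise ValueError(f"GGUF v{version} uses 32-bit counts; not handled by this sketch")
    return {"version": version, "tensor_count": tensor_count, "metadata_kv_count": kv_count}
```

A bad magic or a zero tensor count is a quick sign that the conversion did not complete, well before you try to load the file in a workflow.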

little tips: to make a so-called fast model, you could first cut the selected model (bf16) in half with the cutter, then convert the trimmed model (fp8) to gguf; the result is roughly the same file size as the quantized output from bf16, but with fewer tensors inside, so it may load faster in theory; this is not guaranteed, so test it yourself, and be prepared for a significant quality loss

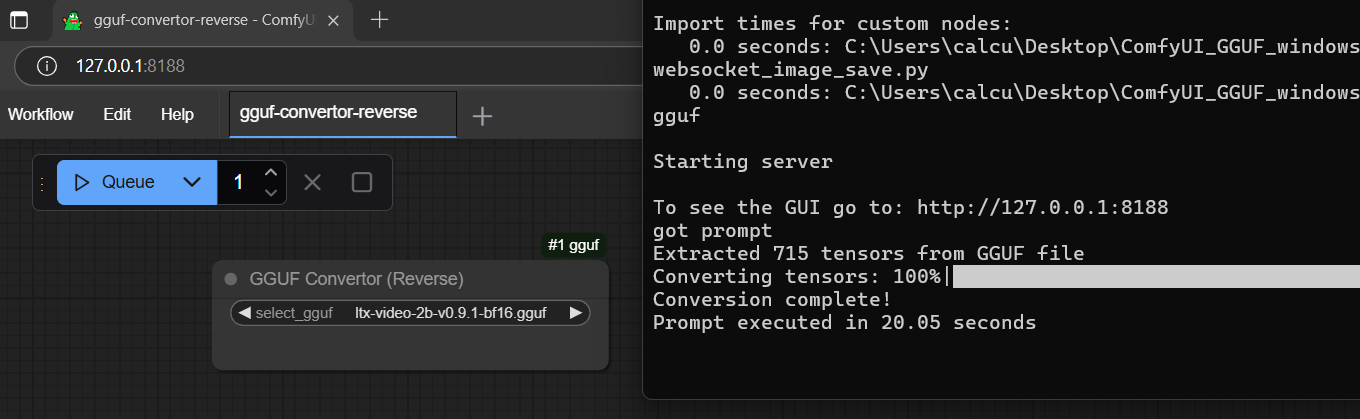

to convert a gguf file back to safetensors:

- drag gguf file(s) to diffusion_models folder (./ComfyUI/models/diffusion_models)

- choose the third last option from the gguf menu, GGUF Convertor (Reverse), and select your gguf file inside the box; as with the other tools, you don't need to connect anything, since the node works independently

- click Queue (run), then follow the progress in the console; when it is done, the converted safetensors file will be saved to the output folder (./ComfyUI/output)

little little tips: the reverse-converted safetensors file contains no clip or vae, so it cannot be used as a checkpoint; put it in the diffusion_models folder (./ComfyUI/models/diffusion_models), select Add Node > advanced > loaders > Load Diffusion Model, and use it like a gguf model (much like the gguf loader) together with separate clip(s) and vae
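
If you are unsure whether a safetensors file is a full checkpoint or a diffusion-model-only file like the reverse-converted output, one rough heuristic is to look at its tensor names. The sketch below assumes Stable-Diffusion-style checkpoint naming, where vae weights start with `first_stage_model.` and text-encoder weights with `cond_stage_model.` or `conditioner.`; other model families name tensors differently, so treat the result as a hint rather than proof. The helper names are hypothetical.

```python
from __future__ import annotations

import json
import struct

# Assumption: Stable-Diffusion-style checkpoint prefixes; other families may differ.
VAE_PREFIXES = ("first_stage_model.",)
TEXT_ENCODER_PREFIXES = ("cond_stage_model.", "conditioner.")


def tensor_names(path: str) -> list[str]:
    """List tensor names from a safetensors header (8-byte LE length + JSON)."""
    with open(path, "rb") as f:
        (header_len,) = struct.unpack("<Q", f.read(8))
        header = json.loads(f.read(header_len))
    return [name for name in header if name != "__metadata__"]


def looks_like_full_checkpoint(names: list[str]) -> bool:
    """True only if both vae and text-encoder weights appear to be bundled in."""
    has_vae = any(n.startswith(VAE_PREFIXES) for n in names)
    has_text_encoder = any(n.startswith(TEXT_ENCODER_PREFIXES) for n in names)
    return has_vae and has_text_encoder
```

A `False` result suggests loading the file through Load Diffusion Model with separate clip(s) and vae, as described above.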

references: comfyui, comfyui_vlm_nodes, comfyui-gguf (special thanks city96), gguf-comfy, gguf-connector, testkit

this node is a member of the gguf family