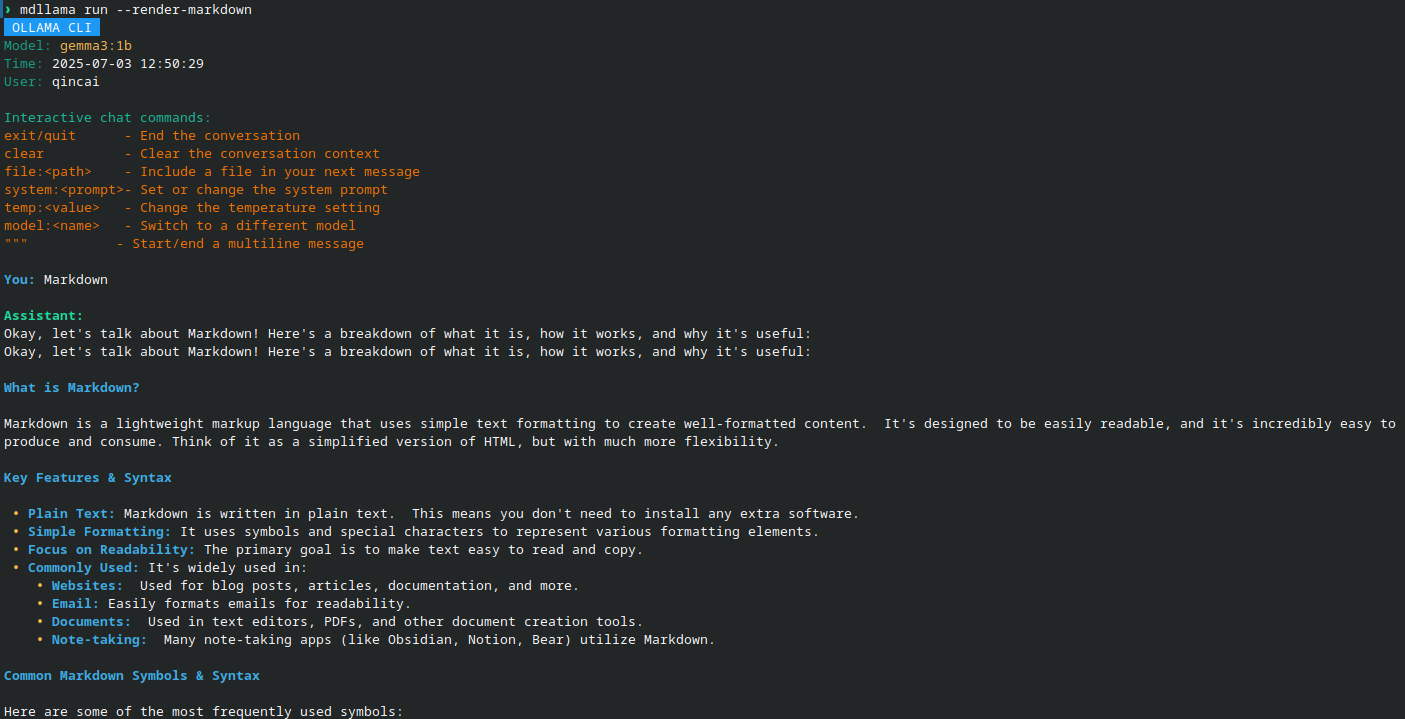

A CLI tool that lets you chat with Ollama and OpenAI models right from your terminal, with built-in Markdown rendering and web search.

- Chat with Ollama models from the terminal

- Built-in Markdown rendering

- Web-search functionality

- Extremely simple installation and removal (see below)

When using mdllama run for interactive chat, you have access to special commands. See the mdllama(1) man page for details:

man 1 mdllama

mdllama supports the following providers:

- Ollama: Local models running on your machine

- OpenAI: Official OpenAI API (GPT-3.5, GPT-4, etc.)

- OpenAI-compatible: Any API that follows OpenAI's format (I like Groq, so use it! https://groq.com)

mdllama setup

# Or specify explicitly

mdllama setup --provider ollama

mdllama setup --provider openai

# Will prompt for your OpenAI API key

mdllama setup --provider openai --openai-api-base https://ai.hackclub.com

# Then provide your API key when prompted

# Use with OpenAI

mdllama chat --provider openai "Explain quantum computing"

# Use with specific model and provider

mdllama run --provider openai --model gpt-4

# Interactive session with streaming

mdllama run --provider openai --stream=true --render-markdown

Configure the demo endpoint used by mdllama by running the setup flow and entering the API credentials below when prompted.

mdllama setup -p openai --openai-api-base https://ai.qincai.xyz

When prompted, provide the following values:

- API key:

sk-proxy-7b8c9d0e1f2a3b4c5d6e7f8a9b0c1d2e
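If setup succeeds but chats fail, it can help to check the endpoint and key outside of mdllama. The sketch below is not part of mdllama: `list_models` is a hypothetical helper, and it assumes the server exposes `GET <base>/v1/models` the way the official OpenAI API does, which an OpenAI-compatible endpoint may or may not do.

```shell
# Hypothetical helper: list models from an OpenAI-compatible endpoint.
# Assumption: the server exposes GET <base>/v1/models like the official OpenAI API.
list_models() {
  base_url="${1%/}"   # strip one trailing slash, if present
  api_key="$2"
  # -f: fail on HTTP errors, -sS: quiet but still show error messages
  curl -fsS -H "Authorization: Bearer ${api_key}" "${base_url}/v1/models"
}

# Example call (performs a network request, so it is left commented out;
# API_KEY is any shell variable holding your key):
# list_models https://ai.qincai.xyz "$API_KEY"
```

A non-zero exit status from the call points to a problem with the URL or the key rather than with mdllama itself.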

After setup you can run the CLI as usual, for example:

mdllama run -p openai

Note: Try asking the model for some Markdown-formatted text, or test the web search features:

- Give me a markdown-formatted text about the history of AI.

- search:Python 3.13 (web search)

- site:python.org (fetch website content)

- websearch:What are the latest Python features? (AI-powered search)

So, try it out and see how it works!

1. Add the PPA to your sources list:

echo 'deb [trusted=yes] https://packages.qincai.xyz/debian stable main' | sudo tee /etc/apt/sources.list.d/qincai-ppa.list

sudo apt update

2. Install mdllama:

sudo apt install python3-mdllama

1. Download the latest RPM from: https://packages.qincai.xyz/fedora/

Or, to install directly:

sudo dnf install https://packages.qincai.xyz/fedora/mdllama-<version>.noarch.rpm

Replace <version> with the latest version number.

2. (Optional, highly recommended) To enable as a repository for updates, create /etc/yum.repos.d/qincai-ppa.repo:

[qincai-ppa]

name=Raymont's Personal RPMs

baseurl=https://packages.qincai.xyz/fedora/

enabled=1

metadata_expire=0

gpgcheck=0
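The repository file from the step above can also be created from the shell instead of an editor. This is a minimal sketch: `render_repo` is a hypothetical helper name, and the file contents are copied from the step above. The line that actually writes the file needs root, so it is left commented out.

```shell
# Hypothetical helper: print the repository definition from the step above.
render_repo() {
  cat <<'EOF'
[qincai-ppa]
name=Raymont's Personal RPMs
baseurl=https://packages.qincai.xyz/fedora/
enabled=1
metadata_expire=0
gpgcheck=0
EOF
}

# Preview the contents; nothing is written to disk here.
render_repo

# To write the file (requires root), uncomment the next line:
# render_repo | sudo tee /etc/yum.repos.d/qincai-ppa.repo >/dev/null
```

Previewing first makes it easy to confirm the baseurl before touching /etc/yum.repos.d.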

Then install with:

sudo dnf install mdllama

3. Install the ollama library from pip:

pip install ollama

You can also install it globally with:

sudo pip install ollama

Note: Earlier RPM packages did not install the ollama library by default, since no system package (python3-ollama) is available, so it had to be installed manually with pip before using mdllama with Ollama models. This issue has been resolved: the RPM package now includes a post-installation script that automatically installs the ollama library using pip.
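To confirm that the ollama library is importable after installing, a quick check along these lines may help. It is only a sketch: `has_py_module` is a hypothetical helper, and it assumes that `python3` on your PATH is the same interpreter mdllama runs under, which may not hold inside virtual environments.

```shell
# Hypothetical helper: succeed if the named Python module can be imported.
# Assumption: python3 on PATH is the interpreter mdllama uses.
has_py_module() {
  python3 -c "import $1" >/dev/null 2>&1
}

if has_py_module ollama; then
  echo "ollama library is available"
else
  echo "ollama library not found; install it with: pip install ollama"
fi
```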

Install via pip (recommended for Windows/macOS and Python virtual environments):

pip install mdllama

Warning: Installing or uninstalling with the traditional bash script is deprecated and should be avoided. Please use the pip method or the DEB/RPM packages instead.

To install mdllama using the traditional bash script, run:

bash <(curl -fsSL https://raw.githubusercontent.com/QinCai-rui/mdllama/refs/heads/main/install.sh)

To uninstall mdllama, run:

bash <(curl -fsSL https://raw.githubusercontent.com/QinCai-rui/mdllama/refs/heads/main/uninstall.sh)

This project is licensed under the GNU General Public License v3.0. See the LICENSE file for details.