
To benchmark, run one of the following batch files on Windows:

- `benchmark.bat` - To benchmark PyTorch
- `benchmark-openvino.bat` - To benchmark OpenVINO

Alternatively, you can run benchmarks by passing the `-b` command-line argument in CLI mode.

## OpenVINO support


Thanks [deinferno](https://github.com/deinferno) for the OpenVINO model contribution.


### Convert SD 1.5 models to OpenVINO LCM-LoRA fused models

We first create an LCM-LoRA baked-in model, replace the scheduler with LCM, and then convert it into an OpenVINO model. For more details, check [LCM OpenVINO Converter](https://github.com/rupeshs/lcm-openvino-converter); you can use this tool to convert any Stable Diffusion 1.5 fine-tuned model to OpenVINO.

<a id="real-time-text-to-image"></a>

## Real-time text to image (EXPERIMENTAL)

We can generate images from text in real time using FastSD CPU.

**CPU (OpenVINO)**

Near real-time inference on CPU using OpenVINO: run the `start-realtime.bat` batch file and open the link in your browser (Resolution: 512x512, Latency: 0.82s on Intel Core i7).


## FastSD CPU on Windows

:exclamation: **You must have a working Python installation (recommended: Python 3.10 or 3.11).**

Clone or download this repo, or download a release.

### Installation

- Double-click `install.bat` (installation takes some time, depending on your internet speed).

### Run

You can run in desktop GUI mode or web UI mode.

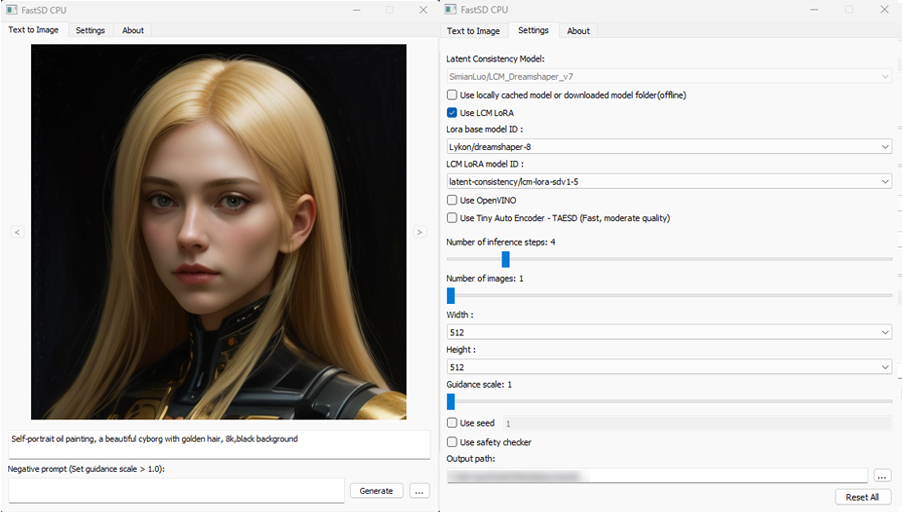

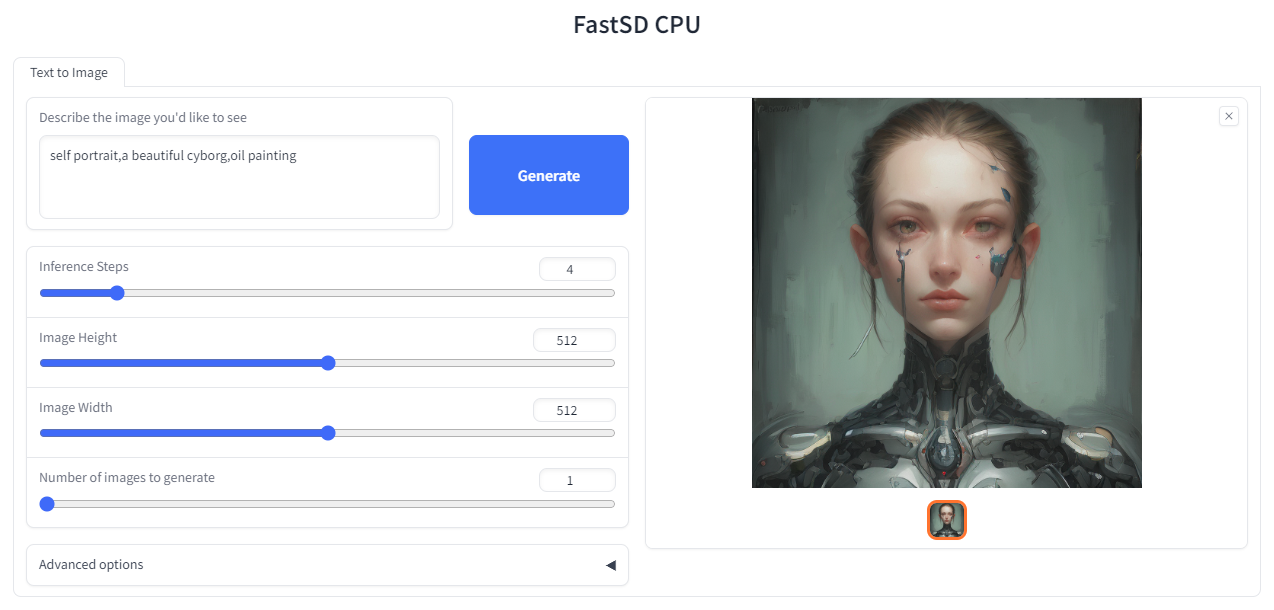

#### Desktop GUI

- To start the web UI, double-click `start-webui.bat`.

## FastSD CPU on Linux

Ensure that you have Python 3.9, 3.10 or 3.11 installed.
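If you are unsure which interpreter is on your path, a small Python check like the one below can confirm the requirement before installing. This is a hypothetical helper, not part of FastSD CPU: the function name `is_supported_python` and the `SUPPORTED_VERSIONS` set are illustrative, and only the major.minor version is compared.

```python
import sys

# Versions listed for Linux/Mac in this README (illustrative; only major.minor is checked).
SUPPORTED_VERSIONS = {(3, 9), (3, 10), (3, 11)}


def is_supported_python(version_info=None) -> bool:
    """Return True if the given (or running) interpreter's major.minor is supported."""
    if version_info is None:
        version_info = sys.version_info
    return tuple(version_info[:2]) in SUPPORTED_VERSIONS


if __name__ == "__main__":
    current = ".".join(str(part) for part in sys.version_info[:3])
    if is_supported_python():
        print(f"Python {current} is supported.")
    else:
        print(f"Python {current} is not one of 3.9, 3.10 or 3.11.")
```

Run it with the same interpreter you plan to use for FastSD CPU, for example `python3 check_python.py` (the file name is only an example). On Windows, where Python 3.10 or 3.11 is recommended, you could narrow `SUPPORTED_VERSIONS` accordingly.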


`./start-webui.sh`


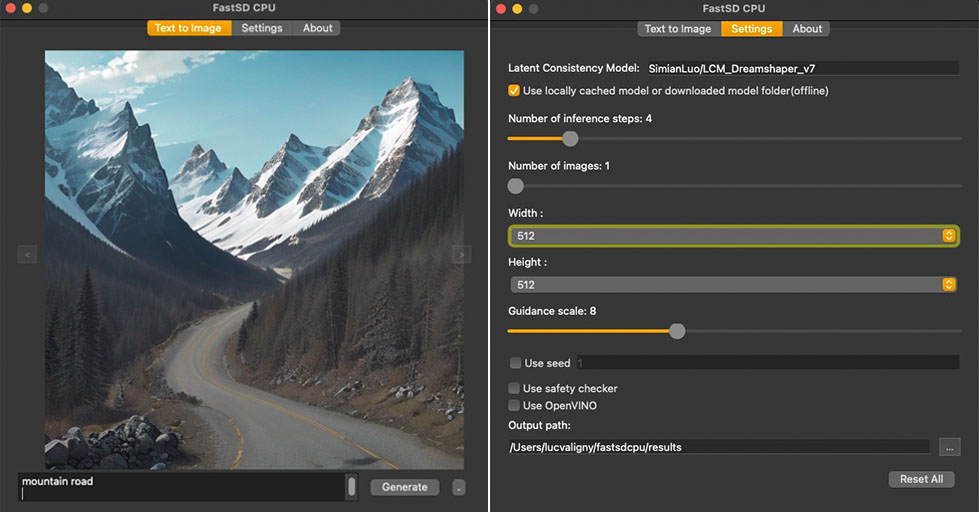

## FastSD CPU on Mac


### Installation


Ensure that you have Python 3.9, 3.10 or 3.11 installed.

If you want to increase image generation speed on Mac (M1/M2 chip), try this:

Run `export DEVICE=mps` and then start the app with `start.sh`.

## Web UI screenshot

## Google Colab

Due to the limitations of using CPU/OpenVINO inside Colab, we use a GPU with Colab.

[Open in Colab](https://colab.research.google.com/drive/1SuAqskB-_gjWLYNRFENAkIXZ1aoyINqL?usp=sharing)


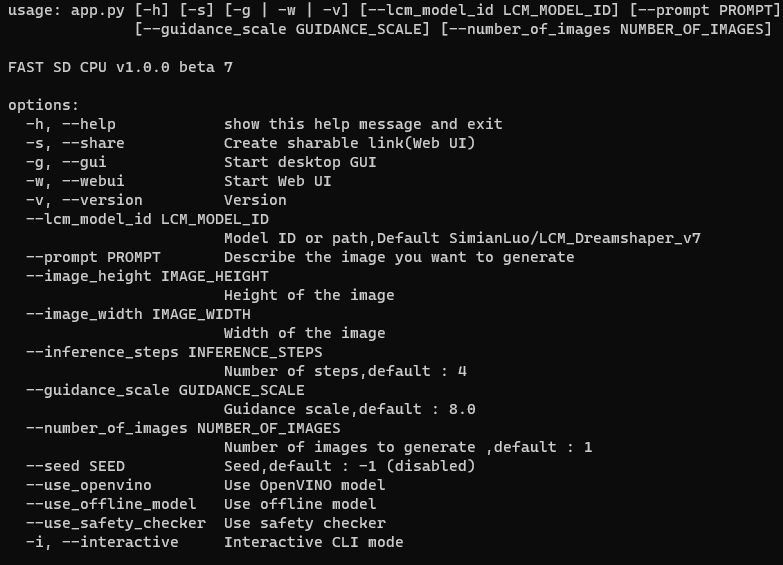

## CLI mode (Advanced users)

Open a terminal and enter the `fastsdcpu` folder.

Activate the virtual environment using the command for your platform:

#### Windows users

(Assuming FastSD CPU is located in the directory `D:\fastsdcpu`)


`D:\fastsdcpu\env\Scripts\activate.bat`

#### Linux users


`source env/bin/activate`

Start the CLI with `src/app.py -h`.

<a id="android"></a>

## Android (Termux + PRoot)


FastSD CPU running on Google Pixel 7 Pro.


Thanks [patientx](https://github.com/patientx) for this guide: [Step by step guide to installing FASTSDCPU on ANDROID](https://github.com/rupeshs/fastsdcpu/discussions/123)

Another step-by-step guide to running FastSD CPU on Android is [here](https://nolowiz.com/how-to-install-and-run-fastsd-cpu-on-android-temux-step-by-step-guide/).

<a id="raspberry"></a>

## Raspberry Pi 4 support

Thanks WGNW_MGM for Raspberry Pi 4 testing. FastSD CPU worked without problems.


System configuration - Raspberry Pi 4 with 4GB RAM, 8GB of SWAP memory.


## Known issues

- TAESD does not work with the OpenVINO image-to-image workflow.
