
A1. Introduction to Reservoir Computing

Reservoir computing is a general concept for computation based on recurrent neural networks (RNNs). RNNs are very well suited to processing time-dependent data, for tasks such as:

- Time series forecasting (univariate, multivariate)
  - Based on a historical curve, forecast its next evolution
  - Based on real-time micro-movements of the car in the parallel lane, what is the probability that its driver will turn into my lane in the next 3 seconds?
  - ...

- Time series classification (univariate, multivariate)
  - Based on EEG data, recognize an oncoming epileptic seizure
  - Based on voice data, recognize the speaker's emotion
  - ...

Reservoir computing makes it possible to exploit the benefits of an RNN very efficiently. The efficiency lies in the fact that the weights of the synapses within the RNN (called the reservoir) are chosen randomly at the beginning and, in contrast with traditional RNN training methods, no further expensive supervised training of the RNN is needed to find weights that solve the given problem. Only the readout layer needs to be trained, most often very simply by fast linear regression.

- External input data is continuously pushed into the reservoir through input neurons (yellow balls). An input neuron is very simple: it only passes the external input on to the input synapses (yellow arrows), which deliver it to the reservoir's hidden neurons (blue balls interconnected by blue arrows). Each pushed input starts a recomputation cycle of the reservoir.

- During the recomputation cycle, a new state is computed for each hidden neuron. The activation function of the hidden neuron receives the summed weighted outputs of the connected input neurons and other hidden neurons, and computes the new state and output of the hidden neuron. The randomly and recurrently connected hidden neurons thus perform a nonlinear transformation of the input and provide rich dynamics of hidden neuron states. After the recomputation cycle, the historical and current input data are described by the current state of the reservoir's hidden neurons.

- Hidden neurons provide so-called predictors. Predictors are collected periodically (mostly after each recomputation cycle) and sent to the readout layer, which computes the desired output.
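The cycle described above can be sketched in a few lines of Python. This is only a minimal illustration with analog (TanH) hidden neurons and static synapses, not the code of any particular library; the names `make_reservoir` and `recompute`, the reservoir size, the connection density, and the weight ranges are all assumptions chosen for the example.

```python
import math
import random

def make_reservoir(n_inputs, n_hidden, density=0.2, seed=0):
    """Create random, fixed input and recurrent weights (hypothetical helper)."""
    rng = random.Random(seed)
    # Input synapses: every input neuron feeds every hidden neuron.
    w_in = [[rng.uniform(-1.0, 1.0) for _ in range(n_inputs)]
            for _ in range(n_hidden)]
    # Sparse random recurrent synapses between hidden neurons.
    w_res = [[rng.uniform(-0.5, 0.5) if rng.random() < density else 0.0
              for _ in range(n_hidden)]
             for _ in range(n_hidden)]
    return w_in, w_res

def recompute(w_in, w_res, state, external_input):
    """One recomputation cycle: returns the new states of all hidden neurons."""
    new_state = []
    for i in range(len(state)):
        # Summed weighted outputs of input neurons and other hidden neurons.
        stimulation = sum(w * x for w, x in zip(w_in[i], external_input))
        stimulation += sum(w * s for w, s in zip(w_res[i], state))
        new_state.append(math.tanh(stimulation))
    return new_state

w_in, w_res = make_reservoir(n_inputs=1, n_hidden=20)
state = [0.0] * 20
predictors_per_cycle = []
for t in range(50):
    external_input = [math.sin(0.3 * t)]       # toy univariate time series
    state = recompute(w_in, w_res, state, external_input)
    predictors_per_cycle.append(list(state))   # predictors for the readout layer
```

Note that the weights are never modified after `make_reservoir` returns; only the hidden neuron states change from cycle to cycle.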

A synapse always interconnects two neurons and transmits a weighted signal in one direction, from the source (presynaptic) neuron to the target (postsynaptic) neuron. If the signal is always weighted by a constant weight, it is a static synapse. If the weight changes over time depending on the dynamics of the connected neurons, it is a dynamic synapse. A synapse can also delay the signal, usually in proportion to the length of the synapse.
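As a rough sketch of a static synapse with a delay, the signal can be held in a small queue for a fixed number of recomputation cycles before it is delivered. The class name `StaticSynapse` and its interface are hypothetical and serve only to illustrate the idea; a dynamic synapse would additionally update its weight from the activity of the two neurons.

```python
from collections import deque

class StaticSynapse:
    """Unidirectional synapse with a constant weight and a fixed delay in cycles.

    Hypothetical illustration, not an API of any specific library.
    """

    def __init__(self, weight, delay=0):
        self.weight = weight
        # Signals still "travelling" along the synapse; initially silence.
        self._in_transit = deque([0.0] * delay)

    def transmit(self, presynaptic_output):
        """Accept the source neuron's output, return the weighted delayed signal."""
        self._in_transit.append(presynaptic_output)
        return self.weight * self._in_transit.popleft()

synapse = StaticSynapse(weight=0.5, delay=2)
received = [synapse.transmit(x) for x in [1.0, 2.0, 3.0, 4.0]]
print(received)  # [0.0, 0.0, 0.5, 1.0]
```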

A hidden neuron is a small computing unit that processes its input (stimulation) and produces an output. The way a neuron turns its input into output is defined by its so-called activation function. A hidden neuron can be understood simply as an envelope around its activation function: the neuron provides the necessary interface and the activation function performs the core calculations. Activation functions (and therefore also hidden neurons) fall into two types: analog and spiking. For better insight into the activation functions, see the wiki pages.

An analog activation function bears no similarity to the behavior of a biological neuron and is always stateless. It produces a continuous analog output that depends only on the current input and on a particular transformation equation (usually nonlinear). A typical example of an analog activation function is TanH (hyperbolic tangent), which nonlinearly transforms an input into an output in the range (-1, 1).

A spiking activation function attempts to simulate the behavior of a biological neuron and usually implements one of the so-called "Integrate and Fire" neuron models. It accumulates (integrates) input stimulation on its membrane potential. The behavior of the membrane under stimulation is usually defined by one or more ordinary differential equations. When the membrane potential exceeds the firing threshold, the neuron fires a short pulse (spike) and resets the membrane potential, and the cycle starts again. For better insight into biological neuron models, see the wiki pages.

A spiking activation function is therefore time-dependent and must remember its previous state.

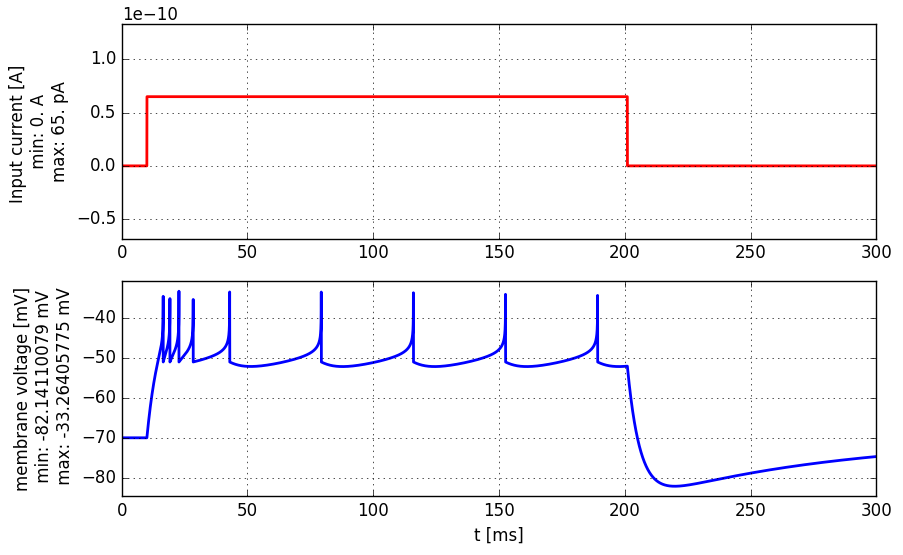

The following figure illustrates the progress of membrane potential under constant stimulation. The figure is from the great online book Neuronal Dynamics and shows the behavior of the "Adaptive Exponential Integrate and Fire" model.

Note that the membrane potential (blue line) is the state, not the output. The output of a spiking activation function is binary. Here it is mostly 0, and only at the time points where the membrane potential exceeds the firing threshold of -40 mV is it 1 (a fired spike).
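The difference between state and output can be seen in a small simulation. The sketch below deliberately uses the plain leaky integrate-and-fire model, which is much simpler than the adaptive exponential model in the figure, and integrates it with Euler steps. The class name and all constants except the -40 mV threshold are illustrative assumptions.

```python
class LeakyIntegrateAndFire:
    """Simplified spiking activation (leaky integrate-and-fire, Euler steps).

    All constants are illustrative assumptions, not values read from the figure.
    """

    def __init__(self, rest=-70.0, threshold=-40.0, reset=-70.0,
                 time_constant=10.0, resistance=1.0, dt=1.0):
        self.rest = rest                    # resting potential (mV)
        self.threshold = threshold          # firing threshold (mV)
        self.reset = reset                  # potential right after a spike (mV)
        self.time_constant = time_constant  # membrane time constant (ms)
        self.resistance = resistance        # scales stimulation to mV
        self.dt = dt                        # simulation step (ms)
        self.potential = rest               # state remembered between calls

    def compute(self, stimulation):
        """Integrate one step of stimulation; return 1 on a spike, else 0."""
        leak = -(self.potential - self.rest)
        drive = self.resistance * stimulation
        self.potential += self.dt * (leak + drive) / self.time_constant
        if self.potential >= self.threshold:
            self.potential = self.reset     # reset and start a new cycle
            return 1
        return 0

neuron = LeakyIntegrateAndFire()
spikes = [neuron.compute(40.0) for _ in range(100)]   # constant stimulation

weak = LeakyIntegrateAndFire()
weak_spikes = [weak.compute(20.0) for _ in range(100)]  # too weak to reach threshold
```

Under the stronger constant stimulation the output is a regular train of isolated 1s separated by 0s, while `neuron.potential` rises and resets between them. Under the weaker stimulation the potential settles below the threshold and the output stays 0.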

- A reservoir typically contains hundreds (and sometimes thousands) of randomly connected hidden neurons.

- The influence of historical input data on the current states of the hidden neurons weakens over time. The memory capacity of the reservoir depends on several aspects, such as the number of hidden neurons and their type, the density of the interconnections, synaptic delays, ...

- The rich reservoir dynamics make it possible to train the readout layer to map the same predictors to different desired outputs at the same time.
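The fading influence of old input can be demonstrated with a small experiment: two copies of the same reservoir start from different states (that is, with different histories) and then receive identical input. The sketch below assumes TanH hidden neurons and, as a simple sufficient condition chosen for this example only, scales each neuron's incoming recurrent weights so that their absolute values sum to 0.9. Other scaling rules are used in practice.

```python
import math
import random

rng = random.Random(1)
n_hidden = 30

w_in = [rng.uniform(-1.0, 1.0) for _ in range(n_hidden)]
w_res = [[rng.uniform(-1.0, 1.0) for _ in range(n_hidden)]
         for _ in range(n_hidden)]
# Assumption for this sketch: make every row of absolute weights sum to 0.9,
# so each cycle shrinks the difference between any two states by at least 10 %.
for row in w_res:
    total = sum(abs(w) for w in row)
    for j in range(n_hidden):
        row[j] *= 0.9 / total

def recompute(state, x):
    """One recomputation cycle for a single scalar input x."""
    return [math.tanh(w_in[i] * x + sum(w * s for w, s in zip(w_res[i], state)))
            for i in range(n_hidden)]

# Two copies of the reservoir that differ only in their past.
state_a = [rng.uniform(-1.0, 1.0) for _ in range(n_hidden)]
state_b = [rng.uniform(-1.0, 1.0) for _ in range(n_hidden)]

distances = []
for t in range(60):
    x = math.sin(0.2 * t)   # from now on both copies see the same input
    state_a = recompute(state_a, x)
    state_b = recompute(state_b, x)
    distances.append(max(abs(a - b) for a, b in zip(state_a, state_b)))

print(distances[0], distances[-1])
```

The largest difference between the two state vectors shrinks with every cycle, so after enough cycles the states depend only on the recent shared input and no longer on the differing past.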

The readout layer receives the predictors and computes one or more desired outputs. It has to be trained using a supervised training method. The most commonly used technique is linear regression, which searches for the linear coefficients that best map the predictors directly to the desired output.
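A minimal sketch of such a readout, assuming regularized least squares solved through the normal equations, might look as follows. The function names `train_readout` and `compute_readout`, the small `ridge` term, and the toy data are assumptions made for the example; real implementations usually rely on a numerical library instead of hand-written elimination.

```python
def solve_linear_system(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]   # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):                     # back substitution
        tail = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - tail) / m[r][r]
    return x

def train_readout(predictor_rows, desired_outputs, ridge=1e-8):
    """Fit output = bias + sum(coef * predictor) by regularized least squares."""
    rows = [[1.0] + list(p) for p in predictor_rows]   # leading 1.0 is the bias
    n = len(rows[0])
    # Normal equations: (X^T X + ridge * I) w = X^T y
    xtx = [[sum(r[i] * r[j] for r in rows) + (ridge if i == j else 0.0)
            for j in range(n)] for i in range(n)]
    xty = [sum(r[i] * y for r, y in zip(rows, desired_outputs))
           for i in range(n)]
    return solve_linear_system(xtx, xty)

def compute_readout(weights, predictors):
    """Apply the trained linear coefficients to one set of predictors."""
    return weights[0] + sum(w * p for w, p in zip(weights[1:], predictors))

# Toy data: the desired output is an exact linear function of two predictors.
predictor_rows = [[0.1, 0.9], [0.4, -0.2], [-0.7, 0.3], [0.8, 0.5], [-0.3, -0.6]]
desired_outputs = [0.5 + 2.0 * p[0] - 1.0 * p[1] for p in predictor_rows]

weights = train_readout(predictor_rows, desired_outputs)
prediction = compute_readout(weights, [0.2, 0.2])
```

To obtain several desired outputs from the same predictors, one weight vector can be trained per output on the same `predictor_rows`.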

Questions, ideas, suggestions for improvement, and constructive comments are welcome at my email address [email protected], or you can now use GitHub Discussions.