Most CPU threads are idle with multi-GPU and multi-dataloader training #10963


fuy34 asked this question in DDP / multi-GPU / multi-node


Dear @fuy34, yes, something looks wrong. Would you mind sharing a reproducible script we could use to debug this behavior? All processes should each create 6 workers to load the data.
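A sketch of the kind of reproducible script requested here, assuming only PyTorch is installed. The hypothetical `WhoLoadsMe` dataset tags every sample with the worker id from `torch.utils.data.get_worker_info()` and the PID of the process that loaded it, so a single pass over the loader shows how many worker processes one `DataLoader` actually used.

```python
import multiprocessing as mp
import os

import torch
from torch.utils.data import DataLoader, Dataset, get_worker_info


class WhoLoadsMe(Dataset):
    """Toy dataset (hypothetical): each item records which worker produced it."""

    def __init__(self, n_items: int = 32):
        self.n_items = n_items

    def __len__(self):
        return self.n_items

    def __getitem__(self, idx):
        info = get_worker_info()  # None when loading happens in the main process
        worker_id = -1 if info is None else info.id
        return torch.tensor([idx, worker_id, os.getpid()])


def observed_workers(num_workers: int, n_items: int = 32, batch_size: int = 4):
    """Iterate once over the loader; return (worker ids seen, loader PIDs seen)."""
    extra = {}
    if num_workers > 0 and "fork" in mp.get_all_start_methods():
        # Forced only so this toy script runs without extra guards;
        # "fork" has historically been the default start method on Linux.
        extra["multiprocessing_context"] = "fork"
    loader = DataLoader(WhoLoadsMe(n_items), batch_size=batch_size,
                        num_workers=num_workers, **extra)
    worker_ids, pids = set(), set()
    for batch in loader:  # each batch has shape [batch_size, 3]
        worker_ids.update(batch[:, 1].tolist())
        pids.update(batch[:, 2].tolist())
    return worker_ids, pids


if __name__ == "__main__":
    ids, pids = observed_workers(num_workers=6)
    print(f"main PID {os.getpid()}: worker ids {sorted(ids)}, {len(pids)} worker PIDs")
```

Run once per DDP rank with `num_workers=6`, a healthy setup would be expected to report worker ids 0 to 5 and six distinct worker PIDs per rank. The `fork` context is a convenience for the toy script, not a recommendation for the real training job.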


I am training a multi-task model with 4 GPUs

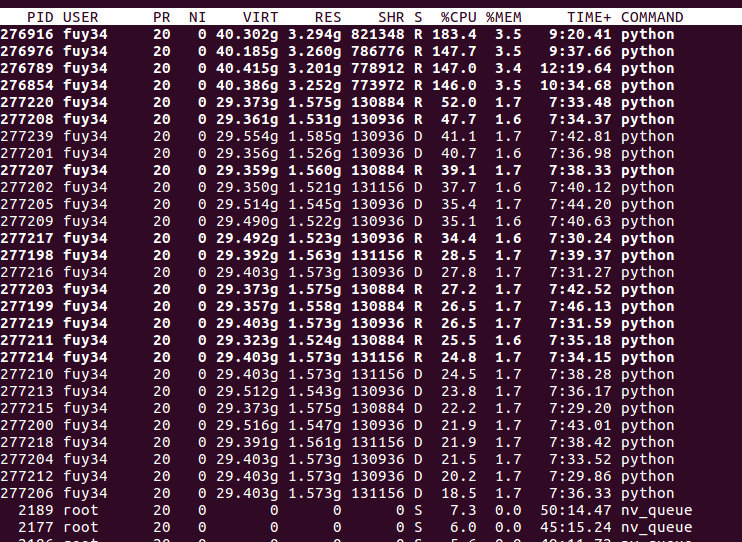

distributed_backend='ddp', and I setnum_workers=6for Dataloader.Based on my understanding, each GPU process will be assigned with 6 dataloaders, and they are supposed to perform data loading and augmentation simultaneously. But I found in the most of time, most of CPU threads are idle, and it seems only one main threads is working heavily (shown in the screenshot below).
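In DDP every rank builds its own `DataLoader`, so `num_workers` is a per-process setting. A minimal sketch of the resulting process arithmetic, plus a hypothetical heuristic (`suggest_num_workers` is not a PyTorch or Lightning API) for sizing the per-rank worker count from the CPUs available. Reserving one core per rank for the main training process is an assumption, not a rule.

```python
import os


def available_cpus() -> int:
    """CPUs this process may run on (honours affinity masks where supported)."""
    if hasattr(os, "sched_getaffinity"):
        return len(os.sched_getaffinity(0))
    return os.cpu_count() or 1


def total_processes(world_size: int, num_workers: int) -> int:
    """One main training process per DDP rank, plus that rank's own workers."""
    return world_size * (1 + num_workers)


def suggest_num_workers(world_size: int, n_cpus: int, reserve_per_rank: int = 1) -> int:
    """Hypothetical heuristic: share CPUs evenly between ranks and keep
    `reserve_per_rank` cores free for each rank's main training process."""
    return max(0, n_cpus // world_size - reserve_per_rank)


if __name__ == "__main__":
    print(total_processes(world_size=4, num_workers=6))  # 4 * (1 + 6) = 28
    print(suggest_num_workers(world_size=4, n_cpus=available_cpus()))
```

With 4 ranks and `num_workers=6`, 24 worker processes plus 4 main processes should exist, so seeing only one busy thread would suggest the workers are starved or blocked rather than missing.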

My dataset is fairly large (~1 TB) and contains many small files (images, depth maps, instance segmentation, etc.). I also have a custom data augmentation that performs random scaling, flipping, and cropping. Training is fast for the first 100 to 200 steps and becomes 4 to 5 times slower after that. GPU utilization frequently sits at 0% while waiting for data. I am not sure whether this is a general PyTorch issue or specific to PyTorch Lightning.
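Because the augmentation is custom, where it runs matters: code called from the `Dataset`'s `__getitem__` executes inside the DataLoader worker processes, while code in the training step runs in each rank's main process. A sketch of a joint random scale, horizontal flip, and crop applied identically to image, depth, and instance-segmentation tensors, assuming channel-first PyTorch tensors; `joint_augment` and its parameters are hypothetical, not the original poster's code.

```python
import torch
import torch.nn.functional as F


def joint_augment(image, depth, seg, crop_hw=(256, 256), scale_range=(1.0, 1.5), generator=None):
    """Apply one random scale, horizontal flip and crop to all targets of a sample.

    image: float [3, H, W]; depth: float [1, H, W]; seg: integer ids [1, H, W].
    The segmentation map is returned as int64.
    """
    lo, hi = scale_range
    s = lo + (hi - lo) * torch.rand(1, generator=generator).item()
    _, h, w = image.shape
    # Never resize below the crop size, so the crop below always fits.
    out_h = max(crop_hw[0], round(h * s))
    out_w = max(crop_hw[1], round(w * s))

    image = F.interpolate(image[None], size=(out_h, out_w), mode="bilinear", align_corners=False)[0]
    # Nearest neighbour avoids blending depth values across object boundaries...
    depth = F.interpolate(depth[None], size=(out_h, out_w), mode="nearest")[0]
    # ...and keeps instance ids intact (no averaged labels).
    seg = F.interpolate(seg[None].float(), size=(out_h, out_w), mode="nearest")[0].long()

    if torch.rand(1, generator=generator).item() < 0.5:  # horizontal flip
        image, depth, seg = image.flip(-1), depth.flip(-1), seg.flip(-1)

    top = torch.randint(0, out_h - crop_hw[0] + 1, (1,), generator=generator).item()
    left = torch.randint(0, out_w - crop_hw[1] + 1, (1,), generator=generator).item()
    rows = slice(top, top + crop_hw[0])
    cols = slice(left, left + crop_hw[1])
    return image[:, rows, cols], depth[:, rows, cols], seg[:, rows, cols]
```

Calling a function like this from `__getitem__` keeps the augmentation spread across the workers; moving it into the LightningModule would put it back on the single main process of each rank.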

Is there any way to keep all CPU threads busy with data loading? Thank you in advance!
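One way to separate a data-loading bottleneck from a GPU or Lightning issue is to time the `DataLoader` on its own for several `num_workers` values. A sketch, assuming PyTorch; `loader_batches_per_second` and the `SleepyDataset` stand-in (which only simulates per-file read latency with `time.sleep`) are hypothetical.

```python
import multiprocessing as mp
import time

import torch
from torch.utils.data import DataLoader, Dataset


class SleepyDataset(Dataset):
    """Hypothetical stand-in for a dataset dominated by per-file read latency."""

    def __init__(self, n_items: int = 400, delay_s: float = 0.005):
        self.n_items = n_items
        self.delay_s = delay_s

    def __len__(self):
        return self.n_items

    def __getitem__(self, idx):
        time.sleep(self.delay_s)  # pretend to open and decode a small file
        return torch.zeros(3, 8, 8)


def loader_batches_per_second(dataset, num_workers, batch_size=8, max_batches=50):
    """Time the DataLoader alone (no model, no GPU) and return batches/second.

    Worker start-up is included in the timing, so compare settings over
    enough batches when using real data.
    """
    extra = {}
    if num_workers > 0 and "fork" in mp.get_all_start_methods():
        # Forced only so this sketch runs as a plain, unguarded script.
        extra["multiprocessing_context"] = "fork"
    loader = DataLoader(dataset, batch_size=batch_size, shuffle=True,
                        num_workers=num_workers, **extra)
    n_batches = 0
    start = time.perf_counter()
    for _ in loader:
        n_batches += 1
        if n_batches >= max_batches:
            break
    return n_batches / (time.perf_counter() - start)


if __name__ == "__main__":
    for nw in (0, 2, 4):
        rate = loader_batches_per_second(SleepyDataset(), nw, max_batches=20)
        print(f"num_workers={nw}: {rate:.1f} batches/s")
```

With the real training dataset in place of `SleepyDataset()`, batches per second that stop increasing as `num_workers` grows would point to storage I/O, rather than unused CPU capacity, as the limiting factor.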
