The Benchmark for 6D Object Pose Estimation (BOP) [1] aims at capturing the state of the art in estimating the 6D pose, i.e. 3D translation and 3D rotation, of rigid objects from RGB/RGB-D images. The benchmark is primarily focused on the scenario where only the 3D object models, which can be used to render synthetic training images, are available at training time. Whereas capturing and annotating real training images requires a significant effort, the 3D object models are often available or can be generated at a low cost using KinectFusion-like systems for 3D surface reconstruction.
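To make the "6D" concrete, here is a minimal sketch, using only the Python standard library, of how a pose combines three rotational and three translational degrees of freedom and maps a model point into camera coordinates. The helper names `rot_z` and `transform_point` are illustrative, and the example restricts the rotation to a single yaw for readability.

```python
import math


def rot_z(angle_rad):
    """3x3 rotation matrix about the z-axis, as row-major nested lists."""
    c, s = math.cos(angle_rad), math.sin(angle_rad)
    return [[c, -s, 0.0],
            [s, c, 0.0],
            [0.0, 0.0, 1.0]]


def transform_point(R, t, p):
    """Map a model-space point p to camera space: R @ p + t."""
    return [sum(R[i][j] * p[j] for j in range(3)) + t[i] for i in range(3)]


# A 6D pose: rotation R (3 DoF, here only a 90 degree yaw) and translation t (3 DoF).
R = rot_z(math.pi / 2)
t = [0.0, 0.0, 500.0]  # e.g. 500 mm in front of the camera
p_cam = transform_point(R, t, [100.0, 0.0, 0.0])
print([round(v, 6) for v in p_cam])  # → [0.0, 100.0, 500.0]
```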

While learning from synthetic data has been common for depth-based pose estimation methods, it remains difficult for RGB-based methods, where the domain gap between synthetic training images and real test images is more severe. For the benchmark, the BlenderProc [3] team and the BOP organizers [1] have therefore joined forces and prepared BlenderProc4BOP, an open-source, lightweight and procedural renderer that produces photorealistic images with physically-based rendering (PBR). The renderer was used to render 50K training images for each of the seven core datasets of the BOP Challenge 2020 (LM, T-LESS, YCB-V, ITODD, HB, TUD-L, IC-BIN). The images can be downloaded from the BOP datasets website.

BlenderProc4BOP saves all generated data in the BOP format, which allows using the visualization and utility functions from the BOP toolkit.
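As an illustration of what "the BOP format" means for ground-truth poses, the sketch below writes and reads per-image annotations in the layout of a BOP `scene_gt.json` file: image IDs as keys, each mapping to a list of objects with a row-major 3x3 rotation `cam_R_m2c`, a translation `cam_t_m2c` in millimetres and an `obj_id`. The helper functions are hypothetical stand-ins; in practice the I/O utilities of the BOP toolkit should be used.

```python
import json


def make_scene_gt_entry(R, t_mm, obj_id):
    """One ground-truth annotation in the scene_gt.json layout.

    R: 3x3 model-to-camera rotation, t_mm: model-to-camera translation in mm.
    """
    return {
        "cam_R_m2c": [float(v) for row in R for v in row],  # 9 values, row-major
        "cam_t_m2c": [float(v) for v in t_mm],              # 3 values, millimetres
        "obj_id": int(obj_id),
    }


def dump_scene_gt(annotations_per_image):
    """Serialize {image_id: [entries]}; JSON object keys become strings."""
    return json.dumps({str(k): v for k, v in annotations_per_image.items()}, indent=2)


def load_scene_gt(text):
    """Parse scene_gt.json text back into {int image_id: [entries]}."""
    return {int(k): v for k, v in json.loads(text).items()}


identity = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]
gt = {0: [make_scene_gt_entry(identity, [0, 0, 700], obj_id=5)]}
restored = load_scene_gt(dump_scene_gt(gt))
print(restored[0][0]["cam_t_m2c"])  # → [0.0, 0.0, 700.0]
```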

Users of BlenderProc4BOP are encouraged to build on top of it and release their extensions.

Recent works [2,4] have shown that physically-based rendering and realistic object arrangement help to reduce the synthetic-to-real domain gap in object detection and pose estimation. In the following, we give an overview of the synthesis approach implemented in BlenderProc4BOP. Detailed explanations can be found in the examples.

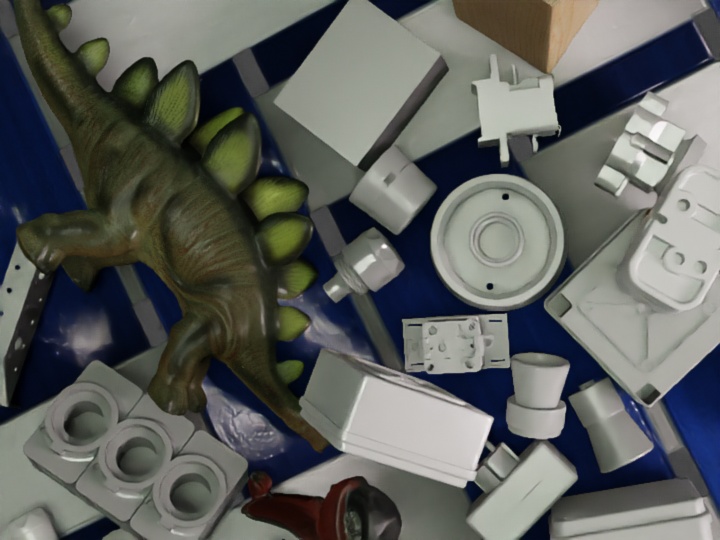

Objects from the selected BOP dataset are arranged inside an empty room, with objects from other BOP datasets used as distractors. To achieve a rich spectrum of generated images, a random PBR material from the CC0 Textures library is assigned to the walls of the room, and light with a random strength and color is emitted from the room ceiling and from a randomly positioned point light source. This simple setup keeps the computational load low (1-4 seconds per image; 50K images can be rendered on 5 GPUs overnight).
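The per-image randomization described above can be sketched as drawing a fresh set of scene parameters for every rendered frame. In this sketch, the `RoomConfig` fields, the material names and all numeric ranges are hypothetical placeholders, not the values used by BlenderProc4BOP. The `render_hours` helper checks the throughput claim: 50K images split over 5 GPUs at 1-4 seconds each take roughly 2.8 to 11.1 hours.

```python
import random
from dataclasses import dataclass


@dataclass
class RoomConfig:
    wall_material: str
    ceiling_strength: float
    ceiling_color: tuple
    point_light_location: tuple
    point_light_strength: float
    point_light_color: tuple


def sample_room_config(materials, rng):
    """Draw one randomized room setup. All ranges are illustrative assumptions."""

    def random_color():
        # Pale light colors: each RGB channel drawn from [0.5, 1.0].
        return tuple(rng.uniform(0.5, 1.0) for _ in range(3))

    return RoomConfig(
        wall_material=rng.choice(materials),
        ceiling_strength=rng.uniform(3.0, 6.0),
        ceiling_color=random_color(),
        point_light_location=(rng.uniform(-1.0, 1.0), rng.uniform(-1.0, 1.0), rng.uniform(0.5, 1.5)),
        point_light_strength=rng.uniform(100.0, 1000.0),
        point_light_color=random_color(),
    )


def render_hours(num_images, seconds_per_image, num_gpus):
    """Wall-clock hours when the images are split evenly across the GPUs."""
    return num_images / num_gpus * seconds_per_image / 3600.0


rng = random.Random(0)
# Hypothetical placeholder names standing in for CC0 Textures materials.
print(sample_room_config(["bricks", "wood", "tiles"], rng))
print(render_hours(50_000, 1, 5), render_hours(50_000, 4, 5))  # about 2.8 h and 11.1 h
```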

Instead of trying to model the object materials perfectly, we randomize them. Realistic object poses are achieved by dropping the objects on the ground plane using the Bullet physics engine integrated in Blender. This creates dense but shallow piles that introduce various levels of occlusion. When rendering training images for the LM dataset, where the objects always stand upright in the test images, we instead place the objects in upright poses on the ground plane.
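For the upright LM case, the placement idea can be sketched with simple rejection sampling: each object only gets a random position on the plane and a random yaw about the vertical axis, and candidates that collide with already placed objects are discarded. This is not BlenderProc's implementation; approximating each object footprint by a bounding circle is an assumption of the sketch, and the physics-based dropping used for the other datasets is not modeled.

```python
import math
import random


def sample_upright_poses(radii, plane_half_size, rng, max_tries=1000):
    """Place objects upright on the plane z = 0 without overlapping footprints.

    Each object is approximated by a bounding circle of the given radius.
    Upright means the only free rotation is a yaw about the vertical axis.
    Returns one (x, y, yaw) tuple per object.
    """
    placed = []  # entries: (x, y, yaw, radius)
    for r in radii:
        for _ in range(max_tries):
            x = rng.uniform(-plane_half_size + r, plane_half_size - r)
            y = rng.uniform(-plane_half_size + r, plane_half_size - r)
            # Reject the candidate if its footprint intersects a placed object.
            if all(math.hypot(x - px, y - py) >= r + pr for px, py, _, pr in placed):
                placed.append((x, y, rng.uniform(0.0, 2.0 * math.pi), r))
                break
        else:
            raise RuntimeError("could not place object; the plane is too crowded")
    return [(x, y, yaw) for x, y, yaw, _ in placed]


rng = random.Random(42)
# Hypothetical bounding radii in metres on a 0.8 m x 0.8 m plane.
for pose in sample_upright_poses([0.05, 0.08, 0.06], 0.4, rng):
    print(pose)
```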

The cameras are positioned to cover the distribution of the ground-truth object poses in the test images. This distribution is given by the ranges of azimuth angles, elevation angles and object-to-camera distances, which are provided in the file `dataset_params.py` of the BOP toolkit.
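A minimal sketch of this camera sampling: draw an azimuth, elevation and distance from given ranges, convert them to a position on a sphere around the objects, and build a look-at rotation so the camera points at the scene center. The numeric ranges in the usage line are placeholders; the real per-dataset values would come from `dataset_params.py`. The sketch assumes a z-up world and the OpenCV-style camera axes used by BOP (x right, y down, z forward), and elevations of exactly ±90 degrees must be excluded because the look-at construction degenerates there.

```python
import math
import random


def spherical_to_cartesian(azimuth_deg, elevation_deg, distance):
    """Camera position on a sphere around the origin (world z-axis up)."""
    az, el = math.radians(azimuth_deg), math.radians(elevation_deg)
    return (distance * math.cos(el) * math.cos(az),
            distance * math.cos(el) * math.sin(az),
            distance * math.sin(el))


def look_at_rotation(cam_pos, target=(0.0, 0.0, 0.0), up=(0.0, 0.0, 1.0)):
    """World-to-camera rotation whose rows are the camera x (right),
    y (down) and z (forward) axes expressed in world coordinates."""
    def norm(v):
        n = math.sqrt(sum(c * c for c in v))
        return [c / n for c in v]

    def cross(a, b):
        return [a[1] * b[2] - a[2] * b[1],
                a[2] * b[0] - a[0] * b[2],
                a[0] * b[1] - a[1] * b[0]]

    z = norm([target[i] - cam_pos[i] for i in range(3)])  # forward
    x = norm(cross(z, up))                                 # right
    y = cross(z, x)                                        # down (unit, since z ⟂ x)
    return [x, y, z]


def sample_camera(rng, azimuth_range, elevation_range, distance_range):
    """Sample one camera pose covering the given ranges (degrees, metres)."""
    pos = spherical_to_cartesian(rng.uniform(*azimuth_range),
                                 rng.uniform(*elevation_range),
                                 rng.uniform(*distance_range))
    return pos, look_at_rotation(pos)


# Placeholder ranges, not values taken from dataset_params.py.
position, rotation = sample_camera(random.Random(0), (0.0, 360.0), (10.0, 80.0), (0.5, 1.5))
print(position)
```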

The following examples are available:

- `bop_challenge`: Configuration files and information on how the official synthetic data for the BOP Challenge 2020 were created.

- `bop_object_physics_positioning`: Drops BOP objects on a plane and randomizes their materials.

- `bop_object_on_surface_sampling`: Samples upright object poses on a plane and randomizes their materials.

- `bop_scene_replication`: Replicates test scenes (object poses, camera intrinsics and extrinsics) from the BOP datasets.

- `bop_object_pose_sampling`: Loads BOP objects and samples camera poses, light poses and object poses in free space.
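Replicating a test scene means rendering with the same camera intrinsics and object-to-camera poses as the real image, so that each model point lands on the same pixel. The sketch below shows that relation with a plain pinhole projection. The helper `project_point` and the example intrinsics are hypothetical; it assumes a 3x3 intrinsic matrix `K` and a model-to-camera rotation and translation in the same convention as the `cam_R_m2c` and `cam_t_m2c` ground-truth fields.

```python
def project_point(K, R, t, p_model):
    """Project a 3D model point to pixel coordinates (u, v) with a pinhole camera."""
    # Model -> camera coordinates: p_cam = R @ p_model + t.
    pc = [sum(R[i][j] * p_model[j] for j in range(3)) + t[i] for i in range(3)]
    if pc[2] <= 0:
        raise ValueError("point lies behind the camera")
    # Homogeneous pixel coordinates: [u*w, v*w, w] = K @ p_cam.
    uvw = [sum(K[i][j] * pc[j] for j in range(3)) for i in range(3)]
    return uvw[0] / uvw[2], uvw[1] / uvw[2]


# Hypothetical intrinsics (fx = fy = 600 px, principal point at (320, 240)).
K = [[600.0, 0.0, 320.0],
     [0.0, 600.0, 240.0],
     [0.0, 0.0, 1.0]]
identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
print(project_point(K, identity, [50.0, 0.0, 1000.0], [0.0, 0.0, 0.0]))  # → (350.0, 240.0)
```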

Results of the BOP Challenge 2020, including the superiority of training with BlenderProc images over training with ordinary OpenGL-rendered images, are presented in our paper BOP Challenge 2020 on 6D Object Localization [5].

[1] Hodaň, Michel et al.: BOP: Benchmark for 6D Object Pose Estimation, ECCV 2018.

[2] Hodaň et al.: Photorealistic Image Synthesis for Object Instance Detection, ICIP 2019.

[3] Denninger, Sundermeyer et al.: BlenderProc, arXiv 2019.

[4] Pitteri, Ramamonjisoa et al.: On Object Symmetries and 6D Pose Estimation from Images, CVPR 2020.

[5] Hodaň, Sundermeyer et al.: BOP Challenge 2020 on 6D Object Localization, ECCV Workshops 2020.